Improving Scene Labeling with Hyperspectral Data


Latest revision as of 11:29, 5 February 2016


Description

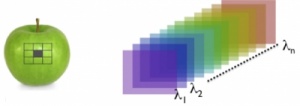

Hyperspectral imaging differs from normal RGB imaging in that it does not measure the amount of light in only three spectral bins (red, green, blue). Instead it uses many more (e.g., 16 or 25), which are not necessarily within the visible range of the spectrum. The camera therefore captures more information than the human eye can perceive. This opens up interesting opportunities to outperform even the best-trained humans; in fact, it can be seen as a step towards spectroscopic analysis of materials.
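To make the difference from RGB concrete: each pixel of a hyperspectral image is a vector with one value per spectral band, rather than three values. The sketch below compares two such per-pixel spectra using the spectral angle. The spectral angle is a common similarity measure in spectroscopy-style material analysis, chosen here purely for illustration; the project description does not prescribe it. The names `Spectrum` and `spectralAngle` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical type: one pixel's spectrum, one intensity per band
// (3 values for RGB, e.g. 16 or 25 for the hyperspectral sensors discussed here).
using Spectrum = std::vector<float>;

// Spectral angle in radians: theta = acos(<a, b> / (|a| * |b|)).
// Scaling a spectrum does not change the angle, so it compares the *shape*
// of two spectra (how light is reflected across bands), not their brightness.
float spectralAngle(const Spectrum& a, const Spectrum& b) {
    assert(a.size() == b.size() && !a.empty());
    double dot = 0.0, normA = 0.0, normB = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        dot += double(a[i]) * b[i];
        normA += double(a[i]) * a[i];
        normB += double(b[i]) * b[i];
    }
    assert(normA > 0.0 && normB > 0.0);  // all-zero spectra have no direction
    double cosTheta = dot / (std::sqrt(normA) * std::sqrt(normB));
    // Clamp rounding errors that push the cosine slightly outside [-1, 1].
    if (cosTheta > 1.0) cosTheta = 1.0;
    if (cosTheta < -1.0) cosTheta = -1.0;
    return static_cast<float>(std::acos(cosTheta));
}
```

Because the angle ignores overall scaling, a darker and a brighter patch of the same material ideally yield a small angle. Two materials that happen to produce similar RGB values can still be told apart once 16 or 25 bands are compared.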

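Recently, a novel hyperspectral imaging sensor has been presented [[video](https://vimeo.com/73617050), [pdf](http://www2.imec.be/content/user/File/Brochures/2015/IMEC%20HYPERSPECTRAL%20SNAPSHOT%20MOSAIC%20IMAGER%2020150421.pdf)] and adopted in the first industrial computer vision cameras [[pdf](http://www.ximea.com/files/brochures/xiSpec-Hyperspectral-cameras-2015-brochure.pdf), [link](http://www.ximea.com/en/usb3-vision-camera/hyperspectral-usb3-cameras)]. Without the lens, these new cameras weigh only 31 grams. Older hyperspectral cameras, by contrast, relied on complex optics with beam splitters and could not provide a large number of channels. They were also very heavy, extremely expensive, and not mobile [[link](http://www.helimetrex.com.au/tetra.html), [link](http://www.fluxdata.com/multispectral-cameras)].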
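The IMEC sensor referenced above is a snapshot mosaic imager, as its brochure title states. A filter mosaic on the sensor assigns each pixel of a small repeating tile to one band, so a single raw frame contains all bands at reduced spatial resolution. The sketch below shows how a software interface might regroup such a frame into a data cube. It assumes a k×k tile (presumably k = 4 for 16 bands and k = 5 for 25 bands) with a simple row-major band order. `Cube` and `mosaicToCube` are hypothetical names, and the real band layout and wavelengths must be taken from the camera's calibration data.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical data-cube container, band-interleaved-by-pixel:
// value(y, x, c) = data[(y * width + x) * bands + c].
struct Cube {
    std::size_t height = 0, width = 0, bands = 0;
    std::vector<std::uint16_t> data;
    std::uint16_t at(std::size_t y, std::size_t x, std::size_t c) const {
        return data[(y * width + x) * bands + c];
    }
};

// Regroup a raw mosaic frame (row-major, one value per sensor pixel) into a cube.
// ASSUMPTION: the filter pattern repeats every k x k pixels and the pixel at
// offset (dy, dx) inside a tile belongs to band dy * k + dx. Each tile becomes
// one cube pixel (no interpolation); incomplete border tiles are dropped.
Cube mosaicToCube(const std::vector<std::uint16_t>& raw,
                  std::size_t rawHeight, std::size_t rawWidth, std::size_t k) {
    assert(k > 0 && raw.size() == rawHeight * rawWidth);
    Cube cube;
    cube.height = rawHeight / k;
    cube.width = rawWidth / k;
    cube.bands = k * k;
    cube.data.resize(cube.height * cube.width * cube.bands);
    for (std::size_t ty = 0; ty < cube.height; ++ty)
        for (std::size_t tx = 0; tx < cube.width; ++tx)
            for (std::size_t dy = 0; dy < k; ++dy)
                for (std::size_t dx = 0; dx < k; ++dx) {
                    std::size_t rawY = ty * k + dy, rawX = tx * k + dx;
                    std::size_t band = dy * k + dx;
                    cube.data[(ty * cube.width + tx) * cube.bands + band] =
                        raw[rawY * rawWidth + rawX];
                }
    return cube;
}
```

Collapsing each tile to one pixel is only the simplest starting point. The missing band values could instead be interpolated (demosaicking) to recover more spatial resolution.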

We have acquired such a camera and would like to explore its use for image understanding, scene labeling, and semantic segmentation (see the labeled image). Your task is to evaluate this camera and integrate it into a working scene labeling system [[paper](http://dl.acm.org/citation.cfm?id=2744788)]. The work is very diverse:

- create a software interface to read the imaging data from the camera

- collect some images to build a dataset for evaluation (fused together with data from a high-res RGB camera)

- adapt the convolutional network we use for scene labeling to profit from the new data (don't worry, we will help you :) )

- create a system from the individual parts (build a case/box mounting the cameras, dev board, WiFi module, ...) and do some programming to make it all work together smoothly and efficiently

- cross your fingers, hoping that we will outperform all the existing approaches to scene labeling in urban areas
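For the dataset and network items above, one simple way to combine the two cameras is early fusion. The hyperspectral cube is upsampled to the RGB resolution and all channels are stacked into a single multi-channel input, so that only the first convolutional layer needs more input channels. The sketch below rests on three assumptions. First, the two images are already registered (pixel-aligned). Second, the RGB resolution is an exact integer multiple of the hyperspectral one. Third, the network takes planar C×H×W float input. `Planar` and `fuseRgbHyperspectral` are hypothetical names, and this is not necessarily how the project's network was adapted.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical planar (channel-major) float image:
// value(c, y, x) = data[(c * height + y) * width + x].
struct Planar {
    std::size_t channels = 0, height = 0, width = 0;
    std::vector<float> data;
    float& at(std::size_t c, std::size_t y, std::size_t x) {
        return data[(c * height + y) * width + x];
    }
    float at(std::size_t c, std::size_t y, std::size_t x) const {
        return data[(c * height + y) * width + x];
    }
};

// Stack an RGB image and a lower-resolution hyperspectral cube into one
// (3 + bands)-channel image. ASSUMPTIONS: images are registered, and
// rgb size == hs size * scale; the cube is upsampled by nearest neighbour.
Planar fuseRgbHyperspectral(const Planar& rgb, const Planar& hs, std::size_t scale) {
    assert(rgb.channels == 3 && scale > 0);
    assert(rgb.height == hs.height * scale && rgb.width == hs.width * scale);
    Planar out;
    out.channels = rgb.channels + hs.channels;
    out.height = rgb.height;
    out.width = rgb.width;
    out.data.resize(out.channels * out.height * out.width);
    for (std::size_t y = 0; y < out.height; ++y)
        for (std::size_t x = 0; x < out.width; ++x) {
            for (std::size_t c = 0; c < rgb.channels; ++c)  // copy RGB channels
                out.at(c, y, x) = rgb.at(c, y, x);
            for (std::size_t c = 0; c < hs.channels; ++c)   // append upsampled bands
                out.at(rgb.channels + c, y, x) = hs.at(c, y / scale, x / scale);
        }
    return out;
}
```

With a 16-band cube the fused input has 19 channels. The first layer's weights then grow from 3 to 19 input channels, while the rest of the network can in principle stay unchanged. In practice, registering the two cameras (different lenses and viewpoints) is likely the harder part and is not covered by this sketch.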

Status: Completed

- Dominic Bernath

- Supervision: Lukas Cavigelli

- Date: Autumn Semester 2015

Prerequisites

- Knowledge of C/C++

- Interest in computer vision and system engineering

Character

- 10% Literature Research

- 40% Programming

- 20% Collecting Data

- 30% System Integration

Professor

Detailed Task Description

Meetings & Presentations

The student and advisor(s) agree on weekly meetings to discuss all relevant decisions and decide how to proceed. Additional meetings can of course be organized to address urgent issues. At the end of the project, you present and defend your work in a 15- or 25-minute presentation followed by 5 minutes of discussion as part of the IIS colloquium (as required for any semester or master's thesis at D-ITET).