Design of a High-performance Hybrid PTZ for Multimodal Vision Systems

Overview

Dynamic Vision Sensors (DVS), also called event-based cameras, can detect small and fast-moving objects when mounted in a stationary position, and they open up many new possibilities for AI and tinyML. We are creating a completely new system with an autonomous base station and distributed smart sensor nodes that runs cutting-edge AI algorithms and performs novel sensor fusion.

Project description

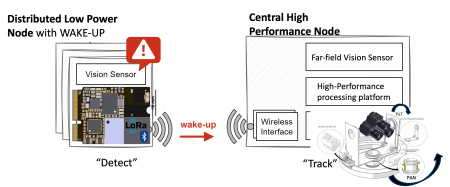

Event cameras (dynamic vision sensors, DVS), often referred to as silicon retinas, are a promising new technology for detecting and tracking fast-moving objects. Although the underlying CMOS technology is very similar to that of commercially available image sensors, the read-out circuitry does not report each pixel's amplitude. Instead, it reports each pixel's change individually, creating events. Modern vision systems are largely based on RGB image sensors, which struggle in difficult lighting conditions compared with event cameras. However, moving an event camera generates a large amount of data, because the apparent ("artificial") motion of the scene itself triggers events. The events created by actual moving objects are then nearly lost among these background events, whereas a static event camera is superior at detecting fast-moving objects in difficult lighting conditions. The literature shows superior performance of event cameras compared with RGB cameras, and research into promising new algorithms for event data is ongoing.

We are currently designing a new high-performance Pan-Tilt-Zoom (PTZ) unit for detecting and tracking objects. It makes use of this sensor technology and communicates with distributed ultra-low-power vision nodes that can raise alarms for the PTZ unit. The distributed nodes can send a location estimate of the detected object, so that the PTZ unit, equipped with NVIDIA Jetson Orin compute capabilities, can perform an in-depth situation analysis.
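The event principle described in this section (a pixel reports a change rather than an amplitude) can be illustrated with a minimal sketch. It is a frame-difference simplification, not the project's implementation: a real DVS pixel works asynchronously and compares against a per-pixel reference level. The function name `generate_events`, the threshold value, and the tiny test frames are assumptions made for illustration.

```python
import math

def generate_events(prev_frame, curr_frame, threshold=0.2, timestamp=0.0):
    """Emit (x, y, t, polarity) for every pixel whose log-intensity
    changed by at least `threshold` between two frames.

    Simplified model (assumption): frames are lists of rows holding
    non-negative intensities; polarity is +1 for brighter, -1 for darker.
    """
    events = []
    for y, (prev_row, curr_row) in enumerate(zip(prev_frame, curr_frame)):
        for x, (p, c) in enumerate(zip(prev_row, curr_row)):
            # +1.0 avoids log(0) for completely dark pixels
            delta = math.log(c + 1.0) - math.log(p + 1.0)
            if abs(delta) >= threshold:
                events.append((x, y, timestamp, 1 if delta > 0 else -1))
    return events

# Hypothetical 3x2 scenes: a static background, and one pixel that brightened
static_scene = [[100, 100, 100], [100, 100, 100]]
object_moved = [[100, 200, 100], [100, 100, 100]]

no_events = generate_events(static_scene, static_scene)
one_event = generate_events(static_scene, object_moved, timestamp=1.0)
```

With a static camera and an unchanged scene, no events are produced; only the pixel that changed reports an event. If the camera itself moves, many pixels change at once, which is the data burst described above.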

Your task in this project will be on the PTZ unit, the vision node, or both, and can comprise one or several of the tasks listed below. Tasks will be assigned according to your thesis type (Semester or Master thesis), interests, and skills.

Tasks:

- Controller implementation: new motor control strategy for moving event cameras

- Embedded Firmware Design for the PTZ-Unit and/or the vision nodes up to full system integration

- New algorithms for detection and tracking with event-cameras
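As an illustration of how the controller task connects to the location estimates sent by the vision nodes, the sketch below converts a target position into pan and tilt angles. The coordinate frame (x forward, y left, z up, relative to the PTZ unit) and the function name `pan_tilt_from_location` are assumptions for this example; the actual message format and mounting geometry are not specified here.

```python
import math

def pan_tilt_from_location(x, y, z):
    """Return (pan_deg, tilt_deg) pointing at a target position.

    Assumed frame: origin at the PTZ unit, x forward, y left, z up,
    all in the same length unit. Pan is positive to the left,
    tilt is positive upwards.
    """
    pan = math.degrees(math.atan2(y, x))
    # Tilt is the elevation above the horizontal plane
    tilt = math.degrees(math.atan2(z, math.hypot(x, y)))
    return pan, tilt

# Hypothetical target: 1 unit ahead and 1 unit to the left, at camera height
pan_deg, tilt_deg = pan_tilt_from_location(1.0, 1.0, 0.0)
```

A motor controller would take these angles as set-points. How quickly and smoothly the unit moves there matters for an event camera, since the motion itself generates events.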

Prerequisites (not all needed!), depending on the tasks

- Embedded firmware design and experience with FreeRTOS, Zephyr, etc.

- C programming

- Mechanical/Circuit design tools (e.g. Altium)

- Experience with ML on microcontrollers (MCUs), or deep knowledge of ML and strong motivation to deploy it on the edge

Type of work

- 20% Literature study

- 60% Software and/or Hardware design

- 20% Measurements and validation

Status: Available

- Type: Semester or Master Thesis (multiple students possible)

- Professor: Prof. Dr. Luca Benini

- Supervisors: Julian Moosmann, Philipp Mayer

- Currently involved students:

- None