Extending our FPU with Internal High-Precision Accumulation (M)

Overview

Status: Available

- Type: Master Thesis

- Professor: Prof. Dr. L. Benini

- Supervisors:

Introduction

Low-precision floating-point (FP) formats are gaining traction in the context of neural network (NN) training. Employing low-precision formats, such as 8-bit FP data types, reduces the model's memory footprint and opens new opportunities to increase the system's energy efficiency. For these reasons, many commercial platforms already provide support for 8-bit FP data types. These formats provide only a few mantissa bits and are therefore not suited for accumulation. They are instead used in mixed-precision operations, where the accumulation is performed in a higher-precision format such as FP16 or FP32.
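The following minimal Python sketch illustrates why few mantissa bits make a format unsuitable for accumulation. It is not taken from the FPU; `quantize` and `accumulate` are hypothetical helpers that model only the mantissa width (2 explicit bits, as in an FP8 format with 5 exponent bits, versus 10 explicit bits, as in FP16) and ignore exponent range, subnormals, and overflow.

```python
import math


def quantize(x: float, mant_bits: int) -> float:
    """Round x to 1 implicit + mant_bits explicit significand bits.

    Hypothetical helper: exponent range, subnormals and overflow are ignored.
    """
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)          # x = m * 2**e with 0.5 <= |m| < 1
    scale = 2 ** (mant_bits + 1)
    # Python's round() rounds ties to even, like the default IEEE 754 mode.
    return math.ldexp(round(m * scale) / scale, e)


def accumulate(values, mant_bits: int) -> float:
    """Sum values, rounding the accumulator to mant_bits after every add."""
    acc = 0.0
    for v in values:
        acc = quantize(acc + v, mant_bits)
    return acc


values = [0.125] * 64                        # exact sum is 8.0
narrow = accumulate(values, mant_bits=2)     # FP8-like accumulator
wide = accumulate(values, mant_bits=10)      # FP16-like accumulator
print(narrow, wide)
```

With these inputs the narrow accumulator stalls at 1.0, because 1.125 is no longer representable with 2 mantissa bits and rounds back to 1.0, while the wider accumulator reaches the exact sum of 8.0.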

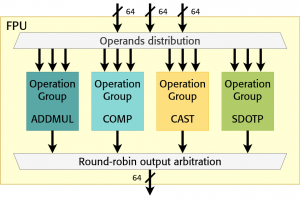

The FP unit (FPU) developed at IIS [1], [2] already provides hardware support for low-precision FP formats (down to 8 bits). The goal of this project is to add support for internal high-precision accumulation to the FPU. This way, the accumulated value does not have to be written to and read from the FP register file at every accumulation step, which reduces energy consumption. At the same time, this decouples the accumulator size from the register file entry size: the internal accumulators can have a custom size, potentially even larger than one register file entry.
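The difference in register file traffic can be sketched with a small behavioral model. This is an illustration under simplifying assumptions, not the FPU's actual microarchitecture: `RegisterFile`, `dot_via_register_file`, and `dot_via_internal_accumulator` are hypothetical names, only accesses to the accumulator value are counted (operand reads are ignored), and plain Python floats stand in for the FP formats.

```python
class RegisterFile:
    """Toy FP register file that counts accumulator accesses."""

    def __init__(self):
        self.regs = {}
        self.reads = 0
        self.writes = 0

    def read(self, idx):
        self.reads += 1
        return self.regs.get(idx, 0.0)

    def write(self, idx, value):
        self.writes += 1
        self.regs[idx] = value


def dot_via_register_file(rf, a, b, acc_reg=0):
    """Accumulator lives in the register file: one read and one write per step."""
    rf.write(acc_reg, 0.0)
    for x, y in zip(a, b):
        acc = rf.read(acc_reg)
        rf.write(acc_reg, acc + x * y)
    return rf.read(acc_reg)


def dot_via_internal_accumulator(rf, a, b, dst_reg=0):
    """Accumulator lives inside the FPU: a single write-back at the end."""
    acc = 0.0                     # assumed internal state, may be wider than a register
    for x, y in zip(a, b):
        acc += x * y
    rf.write(dst_reg, acc)
    return rf.read(dst_reg)


a, b = [1.0, 2.0, 3.0, 4.0], [1.0, 1.0, 1.0, 1.0]
rf_ext, rf_int = RegisterFile(), RegisterFile()
res_ext = dot_via_register_file(rf_ext, a, b)
res_int = dot_via_internal_accumulator(rf_int, a, b)
print(res_ext, rf_ext.reads, rf_ext.writes)
print(res_int, rf_int.reads, rf_int.writes)
```

In this model the accumulator traffic of the register-file variant grows linearly with the vector length, while the internal-accumulator variant needs a constant number of accesses regardless of length.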

Character

- 20% Literature / architecture review

- 40% RTL implementation

- 40% Evaluation

Prerequisites

- Strong interest in computer architecture

- Experience with digital design in SystemVerilog as taught in VLSI I

- Experience with ASIC implementation flow (synthesis) as taught in VLSI II

References

[1] MiniFloat-NN and ExSdotp: An ISA Extension and a Modular Open Hardware Unit for Low-Precision Training on RISC-V cores. https://arxiv.org/abs/2207.03192