FFT-based Convolutional Network Accelerator

Short Description

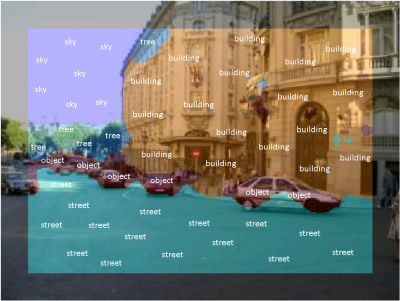

Imaging sensor networks, UAVs, smartphones, and other embedded computer vision systems require power-efficient, low-cost, and high-speed implementations of synthetic vision systems capable of recognizing and classifying objects in a scene. Many popular algorithms in this area require the evaluation of multiple layers of filter banks, and almost all state-of-the-art synthetic vision systems are based on features extracted using multi-layer convolutional networks (ConvNets). When evaluating ConvNets, most of the time (80% to 90%) is spent performing the convolutions. Existing accelerators do most of this work in the spatial domain. The focus of this project is to speed up the convolution step with an ASIC or FPGA accelerator that performs it faster and more power-efficiently in the frequency domain.
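To make the frequency-domain idea concrete, the following is a minimal software sketch, not the project's architecture. It zero-pads the image and the kernel to a common power-of-two size, multiplies their 2D FFTs pointwise, and transforms back. The function names (`fft`, `fft2`, `conv2_fft`, `conv2_direct`) are hypothetical, and a simple recursive radix-2 FFT is assumed purely for illustration.

```python
import cmath

def fft(x, inverse=False):
    """Recursive radix-2 FFT (unnormalized); len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2], inverse)
    odd = fft(x[1::2], inverse)
    sign = 1 if inverse else -1
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

def fft2(a, inverse=False):
    """2D FFT of a square matrix: transform all rows, then all columns."""
    rows = [fft(r, inverse) for r in a]
    cols = [fft(list(c), inverse) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]

def conv2_fft(image, kernel):
    """Full linear 2D convolution computed in the frequency domain."""
    h = len(image) + len(kernel) - 1
    w = len(image[0]) + len(kernel[0]) - 1
    p = 1
    while p < max(h, w):  # pad to a power of two to avoid wrap-around
        p *= 2

    def pad(m):
        return [[m[i][j] if i < len(m) and j < len(m[0]) else 0.0
                 for j in range(p)] for i in range(p)]

    fa, fb = fft2(pad(image)), fft2(pad(kernel))
    prod = [[fa[i][j] * fb[i][j] for j in range(p)] for i in range(p)]
    y = fft2(prod, inverse=True)
    # The inverse transform above is unnormalized, so scale by 1 / p^2.
    return [[y[i][j].real / (p * p) for j in range(w)] for i in range(h)]

def conv2_direct(image, kernel):
    """Reference: full linear 2D convolution in the spatial domain."""
    h = len(image) + len(kernel) - 1
    w = len(image[0]) + len(kernel[0]) - 1
    out = [[0.0] * w for _ in range(h)]
    for i, row in enumerate(image):
        for j, v in enumerate(row):
            for u, krow in enumerate(kernel):
                for t, kv in enumerate(krow):
                    out[i + u][j + t] += v * kv
    return out
```

Both functions should agree up to floating-point rounding, which gives a simple golden model against which a hardware implementation could be compared.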

Status: Completed

- Christian Kuhn

- Supervision: Lukas Cavigelli

- Date: autumn semester 2015

Prerequisites

- Knowledge of Matlab

- Interest in computer vision, signal processing and VLSI design

- VLSI 1

- If you want the ASIC to be manufactured, enrolment in VLSI 2 is required and at least one student has to test the chip as part of the VLSI 3 lecture.

Character

- 20% Theory / Literature Research

- 60% VLSI Architecture, Implementation & Verification

- 20% VLSI back-end Design

Professor

Detailed Task Description

Goals

The goals of this project are

- for the students to get to know the ASIC design flow from specification through architecture exploration to implementation, functional verification, back-end design and silicon testing.

- to explore various architectures for performing the 2D convolutions used in convolutional networks energy-efficiently in the frequency domain, considering the constraints of an ASIC design, and to perform fixed-point analyses for the most viable architecture(s).
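As a starting point for the architecture exploration, a back-of-the-envelope multiplication count can indicate when a frequency-domain approach might pay off. The sketch below is only a rough model under stated assumptions: a radix-2 FFT with (p/2)·log2(p) complex multiplications per length-p transform, four real multiplications per complex multiplication, precomputed kernel spectra, one forward transform per input channel, and one inverse transform per output channel. It ignores additions, memory traffic, trivial twiddle factors, and the symmetry of real-valued inputs. The function names are hypothetical.

```python
def next_pow2(x):
    """Smallest power of two that is >= x."""
    p = 1
    while p < x:
        p *= 2
    return p

def direct_layer_mults(n, k, c_in, c_out):
    """Real multiplications for a full n x n by k x k convolution per channel pair."""
    return c_in * c_out * n * n * k * k

def fft_layer_mults(n, k, c_in, c_out, real_per_complex=4):
    """Rough real-multiplication count for the same layer in the frequency domain."""
    p = next_pow2(n + k - 1)                  # padded transform size
    log2_p = p.bit_length() - 1
    fft2_cost = p * p * log2_p                # complex mults per 2D radix-2 FFT
    transforms = c_in + c_out                 # forward per input, inverse per output
    pointwise = c_in * c_out * p * p          # spectrum products per channel pair
    return real_per_complex * (transforms * fft2_cost + pointwise)

if __name__ == "__main__":
    for c in (1, 4, 16, 64):
        d = direct_layer_mults(32, 7, c, c)
        f = fft_layer_mults(32, 7, c, c)
        print(f"channels={c:3d}  direct={d:12d}  fft={f:12d}  ratio={d / f:.2f}")
```

Under these assumptions, a single image-kernel pair is cheaper in the spatial domain, and the frequency-domain approach only gains once the transforms are reused across many input and output channels. Whether that carries over to area and energy in an ASIC is exactly what the exploration has to establish.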

Important Steps

- Do some first project planning. Create a time schedule and set some milestones based on what you have learned as part of the VLSI lectures.

- Get to understand the basic concepts of convolutional networks. Create a Matlab model of the problem at hand.

- Catch up on relevant previous work, in particular the papers we give to you.

- Become aware of the possibilities and limitations of the target technology; make some very rough estimates of area and timing. Also consider setting some target specifications for your chip.

- Come up with and evaluate/discuss several possible architectures (architecture exploration). Implement the datapath and the most resource-relevant parts to get a first impression of the most promising architecture(s). Also give some initial thought to testability.

- Run detailed fixed-point analyses to determine the signal width in all parts of the data path.

- Create high quality, synthesizable VHDL code for your circuit. Please respect the lab's coding guidelines and continuously verify proper functionality of the individual parts of your design.

- Implement the necessary configuration interface, ...

- Perform thorough functional verification. This is very important.

- Take your final implementation through the backend design process.

- Write a project report. Include all major decisions taken during the design process and justify your choices. Include everything that deviates from the standard case. Show off everything that took time to figure out and all your ideas that have influenced the project.

Be aware that these steps cannot always be performed one after the other; they often require some initial guesses followed by several iterations. Please use the supplied SVN repository for your VHDL files and maybe even your notes, presentation, and the final report (you can check out the code on any device, collaborate more easily and intensively, keep track of changes to the code, have a backup of every version, ...).
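For the fixed-point analysis step, one common approach is to quantize a floating-point reference signal to a candidate word length and measure the resulting signal-to-quantization-noise ratio (SQNR). The sketch below assumes a signed two's-complement format with saturation and Python's built-in `round` (round-half-to-even); the helper names `quantize` and `sqnr_db` are hypothetical, and the sine test signal is only a stand-in for real datapath values.

```python
import math

def quantize(x, frac_bits, total_bits):
    """Round x to a signed fixed-point grid with saturation; return it as a float."""
    scale = 1 << frac_bits
    lo = -(1 << (total_bits - 1))        # most negative integer code
    hi = (1 << (total_bits - 1)) - 1     # most positive integer code
    code = max(lo, min(hi, round(x * scale)))
    return code / scale

def sqnr_db(reference, frac_bits, total_bits):
    """SQNR in dB of the quantized signal relative to the reference."""
    signal = sum(v * v for v in reference)
    noise = sum((v - quantize(v, frac_bits, total_bits)) ** 2 for v in reference)
    return float("inf") if noise == 0 else 10 * math.log10(signal / noise)

if __name__ == "__main__":
    samples = [math.sin(0.1 * i) for i in range(1000)]
    # Sweep the word length while keeping two integer bits (including sign).
    for total_bits in (8, 10, 12, 16):
        frac_bits = total_bits - 2
        print(total_bits, round(sqnr_db(samples, frac_bits, total_bits), 1))
```

Running such a sweep on the Matlab model's intermediate signals, rather than on a sine wave, would show where in the datapath additional bits actually reduce the implementation loss.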

Meetings & Presentations

The students and advisor(s) agree on weekly meetings to discuss all relevant decisions and decide on how to proceed. Of course, additional meetings can be organized to address urgent issues.

Around the middle of the project there is a design review, where senior members of the lab review your work (bring all the relevant information, such as preliminary specifications, block diagrams, synthesis reports, testing strategy, ...) to make sure everything is on track and to decide whether further support is necessary. They also make the final decision on whether the chip is actually manufactured (no reason to worry if the project is on track) and whether more chip area, a different package, ... is provided. For more details, refer to [1].

At the end of the project, you have to present/defend your work during a 15 min. presentation and 5 min. of discussion as part of the IIS colloquium.

Deliverables

- description of the most promising architectures and a justification of the decision taken (as part of the report)

- synthesizable, verified VHDL code

- generated test vector files

- synthesis scripts & relevant software models developed for verification

- synthesis results and final chip layout (GDS II data), bonding diagram

- datasheet (part of report)

- presentation slides

- project report (in digital form; a hard copy also welcome, but not necessary)

Timeline

To give some idea of how the time can be split up, we provide a possible partitioning:

- Literature survey, building a basic understanding of the problem at hand, catching up on related work (2 weeks)

- Architecture design & evaluation (2-3 weeks)

- Fixed-point model, implementation loss, test environment (1-2 weeks)

- HDL implementation, simulation, debugging (3 weeks)

- Synthesis/Backend (2 weeks)

- Report and presentation (2-3 weeks)

Literature

- FFT-related: [2], [3]

- Hardware Acceleration of Convolutional Networks:

- C. Farabet, B. Martini, B. Corda, P. Akselrod, E. Culurciello and Y. LeCun, "NeuFlow: A Runtime Reconfigurable Dataflow Processor for Vision", Proc. IEEE ECV'11@CVPR'11 [4]

- V. Gokhale, J. Jin, A. Dundar, B. Martini and E. Culurciello, "A 240 G-ops/s Mobile Coprocessor for Deep Neural Networks", Proc. IEEE CVPRW'14 [5]

- [6]

- two not-yet-published papers by our group on acceleration of ConvNets