Latest revision as of 10:54, 25 January 2024

High-Performance Systems-on-Chip

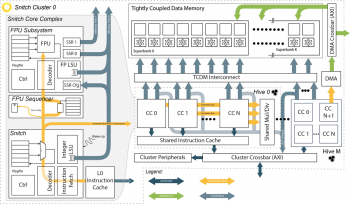

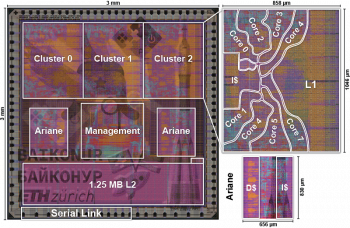

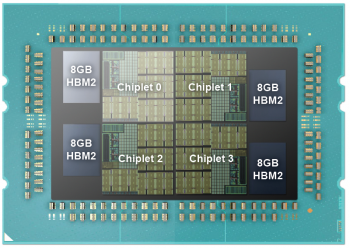

Today, a multitude of data-driven applications such as machine learning, scientific computing, and big data demand an ever-increasing amount of parallel floating-point performance from computing systems. Increasingly, such workloads must scale across a wide range of platforms and energy budgets, from supercomputers simulating next week's weather to smartphone cameras correcting for low-light conditions.

This brings challenges on multiple fronts:

- Energy efficiency becomes a major concern: as logic density increases, supplying these systems with energy and managing their heat dissipation require increasingly complex solutions.

- Memory bandwidth and latency become a major bottleneck as the amount of processed data increases. Despite continuous advances, memory lags behind computing in scaling, and many data-driven problems today are memory-bound.

- Parallelization and scaling bring challenges of their own: on-chip interconnects may introduce significant area and performance overheads as they grow, and both the data and instruction streams of cores may compete for valuable memory bandwidth and interfere in a destructive way.

While all state-of-the-art high-performance computing systems are constrained by the above issues, they are also subject to a fundamental trade-off between efficiency and flexibility. This forms a design space which includes the following paradigms:

- Accelerators are designed to do one thing very well: they are very energy-efficient and performant and usually offer predetermined data movement. However, they offer little or no programmability and are inflexible and monolithic in their design.

- Superscalar out-of-order CPUs, at the other end of the spectrum, provide extreme flexibility, full programmability, and reasonable performance across various workloads. However, they incur large area and energy overheads for a given performance, use memory inefficiently, and are often hard to scale to manycore systems.

- GPUs are parallel and data-oriented by design, yet still meaningfully programmable, aiming for a sweet spot between scalability, efficiency, and programmability. However, they are still subject to memory access challenges and often require manual memory management for decent performance.

How can we further improve on these existing paradigms? Can we design decently efficient and performant, yet freely programmable systems with scalable, performant memory systems?

If these questions sound intriguing to you, consider joining us for a project or thesis! You can find currently available projects and our contact information below.

Our Activities

We are primarily interested in architecture design and hardware implementation for high-performance systems. However, ensuring high performance requires us to consider the entire hardware-software stack:

- HPC Software: Design and porting of high-performance applications, benchmarks, compiler tools, and operating systems (Linux) to our hardware.

- Hardware-software codesign: Design of performance-aware algorithms and kernels, and of hardware that can be programmed efficiently in processor-based systems.

- Architecture: RTL implementation of energy-efficient designs with an emphasis on high utilization and throughput, as well as on efficient interoperability with existing IPs.

- SoC design and implementation: Design of full high-performance systems-on-chip; implementation and tapeout on modern silicon technologies such as TSMC's 65 nm and GlobalFoundries' 22 nm nodes.

- IC testing and board-level design: Testing of the returned chips with industry-grade automated test equipment (ATE) and design of system-level demonstrator boards.

Our current interests include systems with low control-to-compute ratios, high-performance on-chip interconnects, and scalable many-core systems. However, we are always happy to explore new domains; if you have an interesting idea, contact us and we can discuss it in detail!

Who are we

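Thomas Benz

- e-mail: tbenz@iis.ee.ethz.ch
- phone: +41 44 632 05 18
- office: ETZ J85

Luca Bertaccini

- e-mail: lbertaccini@iis.ee.ethz.ch
- phone: +41 44 632 55 58
- office: ETZ J78

Marco Bertuletti

- e-mail: mbertuletti@iis.ee.ethz.ch
- office: ETZ J69.2

Luca Colagrande

- e-mail: colluca@iis.ee.ethz.ch
- office: OAT U21

Tim Fischer

- e-mail: fischeti@iis.ee.ethz.ch
- phone: +41 44 632 59 12
- office: ETZ J76.2

Angelo Garofalo

- e-mail: agarofalo@iis.ee.ethz.ch
- office: ETZ J78

Cyril Koenig

- e-mail: cykoenig@iis.ee.ethz.ch
- office: ETZ J76.2

Sergio Mazzola

- e-mail: smazzola@iis.ee.ethz.ch
- phone: +41 44 632 81 49
- office: ETZ J76.2

Matteo Perotti

- e-mail: mperotti@iis.ee.ethz.ch
- phone: +41 44 632 05 25
- office: OAT U21

Samuel Riedel

- e-mail: sriedel@iis.ee.ethz.ch

Paul Scheffler

- e-mail: paulsc@iis.ee.ethz.ch
- phone: +41 44 632 09 15
- office: ETZ J85

Nils Wistoff

- e-mail: nwistoff@iis.ee.ethz.ch
- phone: +41 44 632 06 75
- office: ETZ J85

Yichao Zhang

- e-mail: yiczhang@iis.ee.ethz.ch
- office: ETZ J76.2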

Projects

All projects are annotated with one or more possible project types (M/S/B/G) and a number of students (1 to 3).

- M: Master's thesis: 26 weeks full-time (6 months) for one student only

- S: Semester project: 14 weeks half-time (1 semester lecture period) or 7 weeks full-time for 1-3 students

- B: Bachelor's thesis: 14 weeks half-time (1 semester lecture period) for one student only

- G: Group project: 14 weeks part-time (1 semester lecture period) for 2-3 students

Usually, these are merely suggestions from our side; proposals can often be reformulated to fit students' needs.

Available Projects

- Accelerating Stencil Workloads on Snitch using ISSRs (1-2S/B)

- Advanced Physical Design: Reinforcement Learning for Macro Placement and Mix-Placer (B/1-2S)

- All the flavours of FFT on MemPool (1-2S/B)

- An Efficient Compiler Backend for Snitch (1S/B)

- Approximate Matrix Multiplication based Hardware Accelerator to achieve the next 10x in Energy Efficiency: Full System Integration

- Benchmarking a heterogeneous 217-core MPSoC on HPC applications (M/1-3S)

- Benchmarking a RISC-V-based Server on LLMs/Foundation Models (SA or MA)

- Benchmarking RISC-V-based Accelerator Cards for Inference (multiple SA)

- Creating an At-memory Low-overhead Bufferless Matrix Transposition Accelerator (1-3S/B)

- Cycle-Accurate Event-Based Simulation of Snitch Core

- Design of a Reconfigurable Vector Processor Cluster for Area Efficient Radar Processing (1M)

- Energy Efficient AXI Interface to Serial Link Physical Layer

- Evaluating The Use of Snitch In The PsPIN RISC-V In-network Accelerator (M)

- Extending our FPU with Internal High-Precision Accumulation (M)

- Extending the HERO RISC-V HPC stack to support multiple devices on heterogeneous SoCs (M/1-3S)

- Fast Simulation of Manycore Systems (1S)

- Finalizing and Releasing Our Open-source AXI4 IPs (1-3S/B/2-3G)

- Hardware Exploration of Shared-Exponent MiniFloats (M)

- Implementation of a Coherent Application-Class Multicore System (1-2S)

- IP-Based SoC Generation and Configuration (1-3S/B)

- Routing 1000s of wires in Network-on-Chips (1-2S/M)

- Scaleout Study on Interleaved Memory Transfers in Huge Manycore Systems with Multiple HBM Channels (M/1-3S)

- Serverless Benchmarks on RISC-V (M)

- Towards a High-performance Open-source Verification Suite for AXI-based Systems (M/1-3S/B)

- Towards Formal Verification of the iDMA Engine (1-3S/B)

- Vector-based Parallel Programming Optimization of Communication Algorithm (1-2S/B)

Projects In Progress

- A RISC-V fault-tolerant many-core accelerator for 5G Non-Terrestrial Networks (1-2S/B)

- A RISC-V ISA Extension for Pseudo Dual-Issue Monte Carlo in Snitch (1M/2S)

- A RISC-V ISA Extension for Scalar Chaining in Snitch (M)

- Accelerating Matrix Multiplication on a 216-core MPSoC (1M)

- Big Data Analytics Benchmarks for Ara

- Coherence-Capable Write-Back L1 Data Cache for Ariane (M)

- Creating a Free and Open-Source Verification Environment for Our New DMA Engine (1-3S/B)

- Creating A Technology-independent USB1.0 Host Implementation Targeting ASICs (1-3S/B)

- Designing a Scalable Miniature I/O DMA (1-2B/1-3S/M)

- Efficient collective communications in FlooNoC (1M)

- Fault-Tolerant Floating-Point Units (M)

- GDBTrace: A Post-Simulation Trace-Based RISC-V GDB Debugging Server (1S)

- Runtime partitioning of L1 memory in Mempool (M)

- Vector-based Manycore HPC Cluster Exploration for 5G Communication Algorithm (1-2M)

Completed Projects

- A reduction-capable AXI XBAR for fast M-to-1 communication (1M)

- Integrating an Open-Source Double-Precision Floating-Point DivSqrt Unit into CVFPU (1S)

- Spatz grows wings: Physical Implementation of a Vector-Powered Manycore System (2S)

- Design and Implementation of a Fully-digital Platform-independent Integrated Temperature Sensor Enabling DVFS in Open-source Tapeouts (1-3S/B)

- Design of a Scalable High-Performance and Low-Power Interface Based on the I3C Protocol (1-3S/B)

- Investigating the Cost of Special-Case Handling in Low-Precision Floating-Point Dot Product Units (1S)

- Efficient Execution of Transformers in RISC-V Vector Machines with Custom HW acceleration (M)

- Modeling High Bandwidth Memory for Rapid Design Space Exploration (1-3S/B)

- Improving SystemVerilog Support for Free And Open-Source EDA Tools (1-3S/B)

- Enhancing Our DMA Engine With Virtual Memory (M/1-3S/B)

- Extending Our DMA Architecture with SiFives TileLink Protocol (1-3S/B)

- Extension and Evaluation of TinyDMA (1-2S/B/2-3G)

- Design of a CAN Interface to Enable Reliable Sensors-to-Processors Communication for Automotive-oriented Embedded Applications (1M)

- Design of an Energy-Efficient Ethernet Interface for Linux-capable Systems

- Fitting Power Consumption of an IP-based HLS Approach to Real Hardware (1-3S)

- Enabling Efficient Systolic Execution on MemPool (M)

- Benchmarking a heterogeneous 217-core MPSoC on HPC applications (M/1-3S)

- Creating a Compact Power Supply and Monitoring System for the Occamy Chip (1-3S/B/2-3G)

- A Flexible FPGA-Based Peripheral Platform Extending Linux-Capable Systems on Chip (1-3S/B)

- Developing a Transposition Unit to Accelerate ML Workloads (1-3S/B)

- Running Rust on PULP

- Optimizing the Pipeline in our Floating Point Architectures (1S)

- Design of a Prototype Chip with Interleaved Memory and Network-on-Chip

- Augmenting Our IPs with AXI Stream Extensions (M/1-2S)

- Implementing DSP Instructions in Banshee (1S)

- Streaming Integer Extensions for Snitch (M/1-2S)

- Efficient Synchronization of Manycore Systems (M/1S)

- A Unified Compute Kernel Library for Snitch (1-2S)

- Implementation of a Small and Energy-Efficient RISC-V-based Vector Accelerator (1M)

- Enhancing our DMA Engine with Vector Processing Capabilities (1-2S/B)

- Adding Linux Support to our DMA Engine (1-2S/B)

- Hypervisor Extension for Ariane (M)

- Efficient Memory Stream Handling in RISC-V-based Systems (M/1-2S)

- Transforming MemPool into a CGRA (M)

- Multi issue OoO Ariane Backend (M)

- Physical Implementation of Ara, PULP's Vector Machine (1-2S)

- Towards the Ariane Desktop: Display Output for Ariane on FPGA under Linux (S/B/G)

- Ottocore: A Minimal RISC-V Core Designed for Teaching (B/2G)

- LLVM and DaCe for Snitch (1-2S)

- Investigation of the high-performance multi-threaded OoO IBM A2O Core (1-3S)

- Bringup and Evaluation of an Energy-efficient Heterogeneous Manycore Compute Platform (1-2S)

- An RPC DRAM Implementation for Energy-Efficient ASICs (1-2S)

- A Snitch-Based SoC on iCE40 FPGAs (1-2S/B)

- Physical Implementation of MemPool, PULP's Manycore System (1M/1-2S)

- Manycore System on FPGA (M/S/G)

- A Flexible Peripheral System for High-Performance Systems on Chip (M)

- ISA extensions in the Snitch Processor for Signal Processing (M)

- A Snitch-based Compute Accelerator for HERO (M/1-2S)

- MemPool on HERO (1S)