Neural Architecture Search using Reinforcement Learning and Search Space Reduction

Description

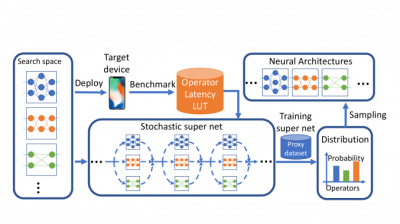

Designing good and efficient neural networks is challenging: most tasks require models that are highly accurate and robust as well as compact. These models often must also satisfy constraints on energy usage, memory consumption, and latency. As a result, the search space for manual design is combinatorially large. One way to tackle this problem is to replace manual design with neural architecture search (NAS). Many flavors of NAS exist, such as differentiable NAS (DNAS) [1], NAS methods that use evolutionary algorithms, and NAS methods that use reinforcement learning [2]. An interesting feature of NAS is that hardware constraints, e.g., on power consumption and/or memory usage, can guide the search for state-of-the-art neural networks [3].

In this project, the student will explore NAS subspaces generated using a method developed at the lab and use a reinforcement learning (RL) agent to find the best network within these subspaces. The student will then extend the subspace-generating method to datasets (NAS spaces) that they find and use RL to find the network best suited to the task at hand.
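
To give a feel for what an RL-driven, constraint-aware search looks like, the following is a minimal sketch of a REINFORCE-style controller over a tiny categorical search space. Everything in it is an assumption made for illustration: the search space, the `proxy_reward` function (a made-up stand-in for training and measuring a network), the latency budget, and the hyperparameters. It is not the lab's subspace method and not the algorithm from [1] or [2], and it uses only the Python standard library so that it runs without PyTorch.

```python
import math
import random

# Hypothetical toy search space: one categorical decision per key.
SEARCH_SPACE = {
    "kernel_size": [3, 5, 7],
    "channels": [16, 32, 64],
    "depth": [2, 4, 6],
}


def proxy_reward(arch, latency_budget=5.0, penalty=0.05):
    """Made-up stand-in for training and measuring a network (assumption)."""
    accuracy = (0.5 + 0.02 * arch["depth"] + 0.002 * arch["channels"]
                + 0.01 * arch["kernel_size"])
    latency = arch["depth"] * arch["channels"] * arch["kernel_size"] ** 2 / 1000.0
    # Only latency above the budget is penalized.
    return accuracy - penalty * max(0.0, latency - latency_budget)


def softmax(logits):
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_index(probs, rng):
    """Draw an index from a categorical distribution."""
    threshold, cumulative = rng.random(), 0.0
    for index, p in enumerate(probs):
        cumulative += p
        if threshold < cumulative:
            return index
    return len(probs) - 1  # guard against floating-point round-off


def reinforce_search(steps=300, lr=0.5, seed=0):
    rng = random.Random(seed)
    # The policy is one independent softmax per decision.
    logits = {name: [0.0] * len(options) for name, options in SEARCH_SPACE.items()}
    baseline, best_arch, best_reward = 0.0, None, -math.inf
    for _ in range(steps):
        probs = {name: softmax(values) for name, values in logits.items()}
        choice = {name: sample_index(p, rng) for name, p in probs.items()}
        arch = {name: SEARCH_SPACE[name][i] for name, i in choice.items()}
        reward = proxy_reward(arch)
        if reward > best_reward:
            best_arch, best_reward = arch, reward
        advantage = reward - baseline
        baseline = 0.9 * baseline + 0.1 * reward  # moving-average baseline
        for name, chosen in choice.items():
            for i, p in enumerate(probs[name]):
                # Gradient of log-softmax w.r.t. logit i: 1[i == chosen] - p_i
                grad = (1.0 if i == chosen else 0.0) - p
                logits[name][i] += lr * advantage * grad
    return best_arch, best_reward


if __name__ == "__main__":
    print(reinforce_search())
```

In a real setting, the reward would come from training (or estimating) each sampled network and measuring it on the target hardware, which is why restricting the search to a smaller subspace matters: each reward evaluation is expensive.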

Status: Available

- Supervision: Thorir Mar Ingolfsson, Matteo Spallanzani

Prerequisites

- Data science: clustering and dimensionality reduction

- Deep learning fundamentals: backpropagation, CNNs

- Programming: Python & PyTorch

Character

- 20% Theory

- 80% Implementation

Literature

- [1] Wu et al., FBNet: Hardware-Aware Efficient ConvNet Design via Differentiable Neural Architecture Search

- [2] Tan et al., MnasNet: Platform-Aware Neural Architecture Search for Mobile

- [3] Vineeth et al., Hardware Aware Neural Network Architectures (using FBNet)

Professor