Real-time View Synthesis using Image Domain Warping

Date

Personnel

- Pierre Greisen

- Michael Schaffner

- Simon Heinzle

Funding

Partners

Summary

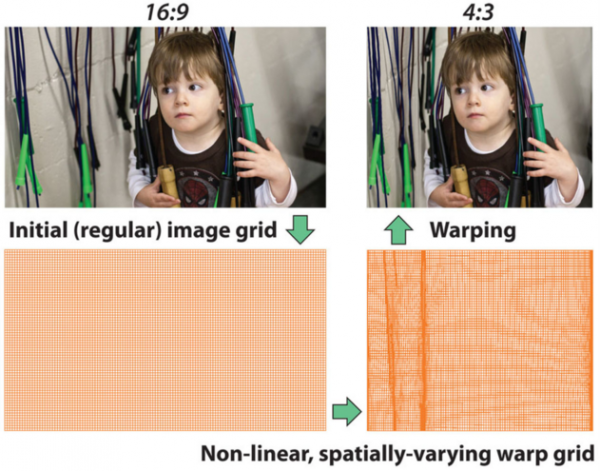

With steadily increasing frame rates and resolutions, real-time video processing poses significant computational challenges. A particularly demanding recent application is video content adaptation: while a growing amount of content is watched on an increasing number of different mobile platforms, most productions are captured with a single acquisition system at fixed parameters. Examples of content adaptation algorithms are content-aware aspect ratio retargeting, non-linear stereoscopic 3D (S3D) adaptation, and S3D to multi-view generation. The goal of this project was to run such view synthesis algorithms in real time on mobile devices.

This work employs the image domain warping (IDW) pipeline. As a first step, an IDW view synthesis algorithm determines an image warping function that depends on the display characteristics. A warping algorithm then transforms the input frames into the output frames according to this warping function. The generation of the warping function is application-specific and is separated from the rendering. For instance, in video retargeting, the warping function retains the aspect ratio of salient (i.e., visually important) parts of the image, while the image distortion is hidden in visually less important regions. In S3D applications, the warping function is derived from the 3D structure of the scene to generate in-between views.
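The two-stage split described above (warp generation, then rendering) can be illustrated with a minimal sketch for horizontal retargeting of a grayscale image. Everything in it is an assumption made for illustration and is not taken from the project: the function names `warp_boundaries` and `render`, the per-column saliency input, and the rule that non-salient columns share the shrinkage uniformly. The rendering stage uses simple backward mapping with linear interpolation, not the EWA rendering described in the publications below.

```python
import numpy as np

def warp_boundaries(saliency, out_width):
    """Stage 1 (hypothetical): build a 1D warp from per-column saliency.

    Fully salient columns (saliency 1) keep unit width; the remaining
    columns are scaled by a common factor k so the total is out_width.
    Returns the output x-coordinate of each input column boundary.
    """
    s = np.clip(np.asarray(saliency, dtype=float), 0.0, 1.0)
    flexible = s.size - s.sum()          # total width that may be distorted
    if flexible <= 0:
        raise ValueError("no non-salient columns to absorb the distortion")
    k = (out_width - s.sum()) / flexible
    if k <= 0:
        raise ValueError("target width too small for the salient content")
    widths = s + (1.0 - s) * k           # per-column output widths
    return np.concatenate(([0.0], np.cumsum(widths)))

def render(image, boundaries):
    """Stage 2 (hypothetical): resample the frame according to the warp.

    Backward mapping: each output pixel center is mapped to a source
    position by inverting the monotone warp, then sampled linearly.
    """
    image = np.asarray(image, dtype=float)
    height, in_width = image.shape
    out_width = int(round(boundaries[-1]))
    out_centers = np.arange(out_width) + 0.5
    # Invert the warp: output x-coordinate -> input x-coordinate.
    src = np.interp(out_centers, boundaries, np.arange(in_width + 1))
    in_centers = np.arange(in_width) + 0.5
    return np.stack([np.interp(src, in_centers, row) for row in image])

# Usage: shrink an 8-column ramp to 6 columns, protecting columns 2..5.
ramp = np.tile(np.arange(8.0), (4, 1))
saliency = np.array([0, 0, 1, 1, 1, 1, 0, 0])
boundaries = warp_boundaries(saliency, out_width=6)
out = render(ramp, boundaries)
```

In this example the four salient columns pass through unchanged, while the two border columns on each side are compressed into one output pixel each. Because the warp is computed separately from the resampling, a different application could swap in another `warp_boundaries` without touching `render`, which mirrors the separation described above.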

The primary focus of this work was on efficient algorithms and hardware architectures for real-time view synthesis using the IDW pipeline. To this end, various hardware architectures have been designed and implemented.

Publications

- P. Greisen, M. Schaffner, D. Luu, M. Val, S. Heinzle, F. Gürkaynak, "Spatially-Varying Image Warping: Evaluations and VLSI Implementations", VLSI-SoC: From Algorithms to Circuits and System-on-Chip Design, volume 418 of IFIP Advances in Information and Communication Technology, pages 64-87. Springer Berlin Heidelberg, 2013

- P. Greisen, R. Emler, M. Schaffner, S. Heinzle, F. Gürkaynak, "A General-Transformation EWA View Rendering Engine for 1080p Video in 130nm CMOS", IFIP/IEEE 20th International Conference on Very Large Scale Integration (VLSI-SoC 2012), Santa Cruz, USA, 2012

- P. Greisen, M. Schaffner, S. Heinzle, M. Runo, A. Smolic, A. Burg, H. Kaeslin, M. Gross, "Analysis and VLSI Implementation of EWA Rendering for Real-Time HD Video Applications", IEEE Transactions on Circuits and Systems for Video Technology, vol. 22, no. 11, pp. 1577-1589, Nov 2012

- P. Greisen, M. Lang, S. Heinzle, A. Smolic, "Algorithm and VLSI Architecture for Real-Time 1080p60 Video Retargeting", High-Performance Graphics 2012, Paris, France, vol. 2012, pp. 57-66, Jun 2012