| (3 intermediate revisions by the same user not shown) |

| Line 2: |

Line 2: |

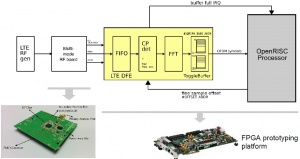

| | [[File:lteTestbed.jpg|thumb|Figure 2: LTE testbed with digital baseband and processor on an FPGA and RF-IC on the [[evaLTE]] FMC module.]] | | [[File:lteTestbed.jpg|thumb|Figure 2: LTE testbed with digital baseband and processor on an FPGA and RF-IC on the [[evaLTE]] FMC module.]] |

| | | | |

| − | ==Introduction== | + | ==Abstract== |

| − | Various estimates predict 20 to 30 billion embedded devices connected to the Internet in 2020 in what is called the Internet of Things (IoT). The IoT does not only take place in our homes, or in areas covered by wireless LANs and other short-range networks, but also in very remote places, deep indoors or underground, which are only covered by cellular networks, and in some cases only by cellular networks with extended coverage.

| + | Narrowband IoT (NB-IoT) is a new cellular standard for Internet of Things (IoT) devices. An efficient, low-power implementation of an NB-IoT receiver is crucial to allow battery-powered operation of the device over several years. Initial timing acquisition is an essential step that has to complete with the lowest possible latency, because the RF part of the receiver dominates overall power consumption and has to stay on during cell search. A high-complexity, low-latency baseband algorithm based on cross-correlations is shown to be more energy-efficient than a low-complexity, high-latency auto-correlation approach. |

| | | | |

| − | Connectivity is crucial for IoT applications. Cellular network coverage is available nearly everywhere in the world and does not depend on custom proprietary infrastructure. Devices connected to the cellular IoT do not depend on IT infrastructure such as Wi-Fi access points. Hence, they can be deployed more easily, since no changes to the IT infrastructure are required and no third-party equipment needs to be integrated. To realize the IoT, cellular standards are currently being released to meet the requirements regarding coverage extension, low power and low cost of IoT components, especially on the device side.

| + | In this thesis, an implementation of an ML cross-correlation NPSS detection algorithm is presented. Its efficient implementation reduces the required energy per timing synchronization by up to 34 % compared to an auto-correlation algorithm. Additionally, simulations of the NPBCH and of the required channel estimation and equalization have been conducted to find the optimal methods for NB-IoT. |

| | | | |

| − | Currently, numerous companies are standardizing cellular standards within the 3GPP. Some standards focus on the evolution of 2G networks, while others are partly compatible with LTE networks. Within LTE, two category M device types are currently being standardized [1]: Cat-M1 and Cat-M2. Cat-M1 has a bandwidth of 1.4 MHz, while Cat-M2 has a bandwidth of 200 kHz (Narrowband LTE, former NB-IoT). Key features of Cat-M devices are blind repetitions to achieve 7-fold coverage extension in terms of range and long sleep cycles for extended battery life. Extended coverage and extended battery lifetime are crucial factors for the success of cellular IoT, and they present stiff challenges for a low-cost and miniaturized realization. In this project, key algorithms of an LTE Cat-M receiver shall be implemented on an FPGA testbed such that extended coverage can be achieved at maximum energy efficiency while compensating impairments arising from the mobile radio channel and the RF-IC.

| + | ===Status: Completed=== |

| − | | + | : Student: msc16f3 |

| − | ==Project Description==

| |

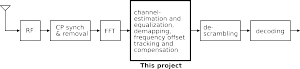

| − | The overall goal of this project is to develop a system which demonstrates the extended coverage (EC) capability of the novel LTE IoT device category M (LTE Cat-M). A repetition-exploiting channel estimator, channel equalizer and demapper shall be implemented. During the project, existing blocks along the receive chain can be used and interconnected as shown in Figure 1.

| |

| − | | |

| − | The implementations shall be done on the FPGA testbed shown in Figure 2 which already includes the receiver blocks up to (and including) the FFT. The testbed also includes an Open-RISC processor and the corresponding software (compiler tool-chain, RF-IC drivers etc.) which can be used in this project. The downlink baseband processing is distributed over the processor

| |

| − | and the yellow box. In the yellow box, the baseband processing blocks which are implemented in dedicated VLSI design are contained.

| |

| − | | |

| − | Before working on the FPGA testbed, the existing LTE Matlab simulation framework LTESim has to be extended to support Cat-M capability. The Matlab implementation of the aimed algorithms will help to perform the HW/SW partitioning and deliver a golden model which will be used to verify the hardware implementations. The results of the HW/SW partitioning in the second work-package will help to decide on the hardware-software partitioning of the algorithms. For some algorithms (or parts thereof) a dedicated hardware implementation (RTL/VLSI design) might be favorable, while for others a software implementation might be better.

| |

| − | | |

| − | The dedicated hardware implementations shall be connected to the processor via a peripheral bus (AMBA/AXI) as accelerators. The communication between the processor and the accelerators is done with register read/write operations and via interrupts. In this project focus is put on the following baseband algorithms:

| |

| − | * Channel estimation, equalization and demapping

| |

| − | * Repetition combining

| |

| − | * Frequency offset estimation and tracking

| |

| − | | |

| − | Frequency offset estimation and tracking are of special interest in this project because of the EC. EC is achieved via multiple blind repetitions which are combined in the receiver to increase the signal-to-noise ratio (SNR). However between two consecutive repetitions a long idle time can occur, during which the frequency offset of the RF-transceiver will drift. This drift needs to be estimated and compensated for the repetition combining. The estimation can be done via the reference symbols.

| |

| − | | |

| − | ===Status: In Progress === | |

| − | : Student: Samuel Willi (msc16f3) | |

| | : Supervision: [[:User:Weberbe|Benjamin Weber]], [[:User:Kroell|Harald Kröll]], [[:User:Mkorb|Matthias Korb]] | | : Supervision: [[:User:Weberbe|Benjamin Weber]], [[:User:Kroell|Harald Kröll]], [[:User:Mkorb|Matthias Korb]] |

| | | | |

| | ===Professor=== | | ===Professor=== |

| − | : [http://www.iis.ee.ethz.ch/portrait/staff/huang.en.html Qiuting Huang]

| + | [http://www.iis.ee.ethz.ch/people/person-detail.html?persid=78758 Qiuting Huang] |

| − | | |

| − | ==References==

| |

| − | [1] Nokia Networks. LTE-M Optimizing LTE for the Internet of Things. http://networks.nokia.com/sites/default/files/document/nokia_lte-m_-_optimizing_lte_for_the_internet_of_things_white_paper.pdf, 2015.

| |

| | | | |

| | [[Category:Digital]] | | [[Category:Digital]] |

| − | [[Category:In progress]] | + | [[Category:Completed]] |

| | + | [[Category:2016]] |

| | [[Category:Master Thesis]] | | [[Category:Master Thesis]] |

| | [[Category:FPGA]] | | [[Category:FPGA]] |

Narrowband IoT (NB-IoT) is a new cellular standard for Internet of Things (IoT) devices. An efficient, low-power implementation of an NB-IoT receiver is crucial to allow battery-powered operation of the device over several years. Initial timing acquisition is an essential step that has to complete with the lowest possible latency, because the RF part of the receiver dominates overall power consumption and has to stay on during cell search. A high-complexity, low-latency baseband algorithm based on cross-correlations is shown to be more energy-efficient than a low-complexity, high-latency auto-correlation approach.
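
The trade-off described above can be written as a simple energy model. This is only a sketch of the reasoning, not a formula taken from the thesis; the symbols <code>P_RF</code> (RF front-end power), <code>P_BB</code> (baseband power) and <code>T</code> (synchronization latency) are assumed names, with superscripts "xc" and "ac" for the cross-correlation and auto-correlation detectors.

```latex
% Energy spent on one timing synchronization (assumed model):
E_{\mathrm{sync}} = \left( P_{\mathrm{RF}} + P_{\mathrm{BB}} \right) \cdot T_{\mathrm{sync}}

% Cross-correlation is the more efficient choice whenever
\left( P_{\mathrm{RF}} + P_{\mathrm{BB}}^{\mathrm{xc}} \right) T^{\mathrm{xc}}
  < \left( P_{\mathrm{RF}} + P_{\mathrm{BB}}^{\mathrm{ac}} \right) T^{\mathrm{ac}}
```

Under this model, when <code>P_RF</code> is much larger than either baseband power, the comparison is governed mainly by the latency ratio, so a higher baseband power can pay off if it shortens the time the RF part has to stay on.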

In this thesis, an implementation of an ML cross-correlation NPSS detection algorithm is presented. Its efficient implementation reduces the required energy per timing synchronization by up to 34 % compared to an auto-correlation algorithm. Additionally, simulations of the NPBCH and of the required channel estimation and equalization have been conducted to find the optimal methods for NB-IoT.
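
To illustrate the general idea of cross-correlation timing detection, the following minimal sketch slides a known reference sequence over noisy received samples and picks the lag with the largest normalized correlation. It is not the ML detector or the hardware implementation of the thesis. The reference signal (a length-11 Zadoff-Chu sequence with root 5, repeated 11 times under a ±1 cover pattern) is an illustrative stand-in for the NPSS, and all function names, the buffer length, the offset and the SNR are assumptions made for this example.

```python
import numpy as np


def zadoff_chu(root: int, length: int) -> np.ndarray:
    """Odd-length Zadoff-Chu sequence with unit-magnitude samples."""
    n = np.arange(length)
    return np.exp(-1j * np.pi * root * n * (n + 1) / length)


def build_reference() -> np.ndarray:
    """Illustrative sync signal: 11 sign-covered copies of a ZC sequence."""
    cover = np.array([1, 1, 1, 1, -1, -1, 1, 1, 1, -1, 1])  # assumed pattern
    zc = zadoff_chu(root=5, length=11)
    return np.concatenate([s * zc for s in cover])


def cross_correlation_timing(rx: np.ndarray, ref: np.ndarray):
    """Return the lag maximizing the normalized cross-correlation metric."""
    ref_len = len(ref)
    ref_energy = np.sum(np.abs(ref) ** 2)
    n_lags = len(rx) - ref_len + 1
    metric = np.empty(n_lags)
    for lag in range(n_lags):
        segment = rx[lag:lag + ref_len]
        # np.vdot conjugates its first argument: sum(conj(ref) * segment)
        corr = np.vdot(ref, segment)
        seg_energy = np.sum(np.abs(segment) ** 2)
        metric[lag] = np.abs(corr) ** 2 / (ref_energy * seg_energy + 1e-12)
    return int(np.argmax(metric)), metric


def simulate_detection(offset: int = 37, snr_db: float = 0.0, seed: int = 0):
    """Embed the reference in complex Gaussian noise and estimate its timing."""
    rng = np.random.default_rng(seed)
    ref = build_reference()
    total_len = 400  # assumed receive buffer length in samples
    noise_power = 10.0 ** (-snr_db / 10.0)  # signal power is 1 per sample
    noise = np.sqrt(noise_power / 2.0) * (
        rng.standard_normal(total_len) + 1j * rng.standard_normal(total_len)
    )
    rx = noise.copy()
    rx[offset:offset + len(ref)] += ref
    return cross_correlation_timing(rx, ref)


if __name__ == "__main__":
    estimate, metric = simulate_detection()
    print("estimated timing offset:", estimate)
    print("peak metric value:", float(metric.max()))
```

Each lag costs one full-length complex correlation, which is the higher complexity the text refers to. An auto-correlation detector would instead correlate the received signal with a delayed copy of itself, which is cheaper per sample but typically needs more observation time to reach the same reliability.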