A RISC-V ISA Extension for Scalar Chaining in Snitch (M)

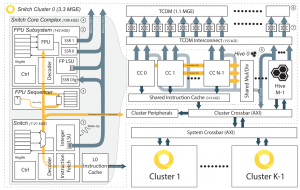

[[File:snitch_block_diagram.png|thumb|Figure 1: A block diagram of the Snitch cluster architecture]] | [[File:snitch_block_diagram.png|thumb|Figure 1: A block diagram of the Snitch cluster architecture]] | ||

Latest revision as of 18:58, 21 April 2024


Overview

Status: In progress

- Type: Semester thesis

- Professor: Prof. Dr. L. Benini

- Supervisors:

- Student: Jayanth Jonnalagadda jjonnalagadd@student.ethz.ch

Introduction

Suppose you have an expression of the form: A = k * (B + C), where A, B and C are vectors of arbitrary length and k is a constant. In the following we will assume, for simplicity, that A, B and C are mapped to stream semantic registers (SSRs) [3], an ISA extension of the RISC-V Snitch core [1, 2], developed in our group. This expression would translate to the following sequence of instructions:

A0: FADD d, b, c

A1: FMUL a, k, d

A2: FADD d, b, c

A3: FMUL a, k, d

A4: FADD d, b, c

A5: FMUL a, k, d

A6: FADD d, b, c

A7: FMUL a, k, d

In processors targeting operating frequencies above 1 GHz the FP ALU is typically pipelined, so an instruction may take multiple cycles to execute. In our single-issue in-order RISC-V Snitch core, both instructions above take 4 cycles to complete and cannot overlap, due to the RAW dependency on the intermediate result d. A loop over the elements of the vector would incur a stall of 4 cycles on every iteration, significantly reducing the instructions per cycle (IPC) and the efficiency of the computation.
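The effect of the RAW dependency can be illustrated with a toy issue model. The following is a minimal sketch, not a model of the actual Snitch pipeline: it assumes a single-issue in-order core in which a result becomes readable `LATENCY` cycles after its producer issues, and it treats the SSR-mapped registers and the constant as always ready. The function `schedule` and the tuple encoding of the program are hypothetical names chosen for illustration. The exact stall count on real hardware depends on writeback and forwarding details that this sketch does not capture.

```python
LATENCY = 4  # assumed FP pipeline depth in cycles, as stated in the text

# Assumption: SSR-mapped registers (a, b, c) and the constant k never cause a RAW stall.
ALWAYS_READY = {"a", "b", "c", "k"}


def schedule(program, latency=LATENCY):
    """Return (issue_cycles, stall_cycles) for a single-issue in-order core.

    `program` is a list of (dest, sources) tuples in program order.
    """
    ready_at = {}      # register -> first cycle in which its value can be read
    issue_cycles = []
    next_free = 0      # earliest cycle in which the next instruction may issue
    stalls = 0
    for dest, srcs in program:
        operands_ready = max(
            (ready_at.get(s, 0) for s in srcs if s not in ALWAYS_READY),
            default=0,
        )
        cycle = max(next_free, operands_ready)
        stalls += cycle - next_free          # cycles lost waiting for operands
        issue_cycles.append(cycle)
        if dest not in ALWAYS_READY:
            ready_at[dest] = cycle + latency
        next_free = cycle + 1
    return issue_cycles, stalls


# Instructions A0..A7: FADD d, b, c followed by FMUL a, k, d, four times.
naive = [("d", ("b", "c")), ("a", ("k", "d"))] * 4
issue_cycles, stalls = schedule(naive)
print("issue cycles:", issue_cycles)
print("stall cycles:", stalls)
```

In this model every FMUL waits for the FADD issued immediately before it, so the stall cycles grow linearly with the number of loop iterations.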

A well-known technique to optimize the above code is loop unrolling. By unrolling the loop and interleaving independent instructions, we can hide the RAW dependency stalls with useful instructions:

B0: FADD d1, b, c

B1: FADD d2, b, c

B2: FADD d3, b, c

B3: FADD d4, b, c

B4: FMUL a, k, d1

B5: FMUL a, k, d2

B6: FMUL a, k, d3

B7: FMUL a, k, d4

This optimization comes at the cost of increased register pressure (we need 8 registers instead of 5), which might require spilling registers to the stack, in turn decreasing the efficiency of the computation.
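The trade-off between unrolling and register pressure can be made concrete with a small script. This is a sketch under the assumption that a dependent instruction does not stall once at least as many instruction slots separate it from its producer as the pipeline has stages. The helper names `unrolled_program`, `registers_used` and `min_raw_distance` are hypothetical and introduced only for this illustration.

```python
def unrolled_program(factor):
    """Build the interleaved sequence: `factor` FADDs followed by `factor` FMULs."""
    adds = [("FADD", f"d{i}", "b", "c") for i in range(1, factor + 1)]
    muls = [("FMUL", "a", "k", f"d{i}") for i in range(1, factor + 1)]
    return adds + muls


def registers_used(program):
    """Set of architectural register names appearing in the program."""
    regs = set()
    for _, dest, src1, src2 in program:
        regs.update((dest, src1, src2))
    return regs


def min_raw_distance(program):
    """Smallest distance, in instruction slots, between a producer and its consumer."""
    last_writer = {}
    best = None
    for idx, (_, dest, src1, src2) in enumerate(program):
        for src in (src1, src2):
            if src in last_writer:
                dist = idx - last_writer[src]
                best = dist if best is None else min(best, dist)
        last_writer[dest] = idx
    return best


for factor in (1, 2, 4):
    prog = unrolled_program(factor)
    print(
        f"unroll x{factor}: {len(registers_used(prog))} registers, "
        f"min RAW distance {min_raw_distance(prog)}"
    )
```

With an unroll factor of 4 the producer-consumer distance matches the 4-cycle latency assumed in the text, at the price of three additional architectural registers.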

On a vector machine (with a large RF) the intermediate result D would be stored in a single vector register, whereas here we are essentially building a vector register out of scalar architectural registers, which are a scarce resource. The vector architecture pays for this performance improvement in area (and energy efficiency), as it features a comparably large register file. On an out-of-order processor we could execute the original version of the program and still expect the performance of the unrolled version, thanks to register renaming. Such a processor in turn spends energy and area on the translation from architectural registers to physical registers.
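To see why register renaming recovers the unrolled schedule, consider the following simplified sketch of a rename stage. It is an illustration only and does not describe any particular out-of-order core: it assumes an unbounded pool of physical registers `p0, p1, ...`, never frees them, and leaves the SSR-mapped registers untouched. The function `rename` is a hypothetical name.

```python
from itertools import count

# Assumption: a, b and c are mapped to SSRs and are not renamed in this sketch.
STREAMS = {"a", "b", "c"}


def rename(program):
    """Map every write to a non-stream register onto a fresh physical register."""
    table = {}        # architectural register -> current physical register
    fresh = count()   # unbounded supply of physical register indices
    renamed = []
    for op, dest, src1, src2 in program:
        # Sources are looked up before the destination mapping is updated.
        srcs = [table.get(s, s) for s in (src1, src2)]
        if dest not in STREAMS:
            table[dest] = f"p{next(fresh)}"
        renamed.append((op, table.get(dest, dest), *srcs))
    return renamed


# Instructions A0..A7 from the original, non-unrolled program.
naive = [("FADD", "d", "b", "c"), ("FMUL", "a", "k", "d")] * 4
renamed = rename(naive)
for instr in renamed:
    print(instr)
```

After renaming, the four writes to d target four different physical registers, which play the same role as d1 to d4 in the unrolled version, so the FADDs no longer have to wait for the preceding FMUL to read d.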

Project description

With this project, we want to enable the execution of computations similar to the one in the example above on an in-order processor, without increasing the register pressure and without incurring any stall. Your efforts will focus on the state-of-the-art RISC-V Snitch core developed in our group. Snitch is a pseudo dual-issue in-order processor, targeting energy-efficient floating-point computations. Snitch-based accelerators, as can be found in Occamy [4], aim to rival the energy efficiency and performance of GPU accelerators. Therefore, preserving the energy efficiency of the core is key.

Your extension will leverage the following observations. To store the intermediate results (d1, d2, d3, d4) required to hide the latency of dependent instructions, the FP ALU's pipeline registers can be employed as physical registers in place of the four architectural registers. We could then rewrite the code as follows, to ensure that only one architectural register is used:

C0: FADD d, b, c

C1: FADD d, b, c

C2: FADD d, b, c

C3: FADD d, b, c

C4: FMUL a, k, d

C5: FMUL a, k, d

C6: FMUL a, k, d

C7: FMUL a, k, d

The remaining problem with this code is that instruction C1 would overwrite the result of C0, and so on. Therefore C4 would read value d4 in register d, instead of value d1 as required. This is because the writeback of C0 is expected to occur before that of C1. Suppose instead that we allow the register file to exert backpressure on the adder, such that intermediate result d2 cannot overwrite d1 until d1 has been consumed from d. This effectively postpones the writeback of C1 until after the operand read of C4, and the semantics of the original program are preserved. Of course, this requires altering the semantics of the individual instructions, and some state register would have to be programmed in advance to tell the core which architectural registers adopt the altered semantics.
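The difference between the two register semantics can be sketched functionally. The model below is an assumption-laden illustration, not the proposed hardware: it executes C0 to C7 in program order, represents the pipeline registers plus the register file entry as a bounded queue, and raises an error where real hardware would stall the producer. The class names `PlainRegister` and `ChainedRegister` and the `capacity` parameter are hypothetical.

```python
from collections import deque


class PlainRegister:
    """Standard semantics: every write overwrites the previous value."""

    def __init__(self):
        self.value = None

    def write(self, value):
        self.value = value

    def read(self):
        return self.value


class ChainedRegister:
    """Altered semantics: values are consumed exactly once, in production order."""

    def __init__(self, capacity):
        self.capacity = capacity   # assumed number of values in flight
        self.pending = deque()

    def write(self, value):
        if len(self.pending) >= self.capacity:
            # Real hardware would back-pressure (stall) the producer here.
            raise RuntimeError("producer would be stalled by backpressure")
        self.pending.append(value)

    def read(self):
        # Consuming the oldest value frees a slot for the upstream unit.
        # An empty queue raises IndexError; hardware would stall the consumer.
        return self.pending.popleft()


def run(d, b, c, k):
    """Execute C0..C3 (FADD d, b, c) followed by C4..C7 (FMUL a, k, d)."""
    for b_i, c_i in zip(b, c):
        d.write(b_i + c_i)
    return [k * d.read() for _ in b]


B = [1.0, 2.0, 3.0, 4.0]
C = [10.0, 20.0, 30.0, 40.0]
K = 2.0

print("plain  :", run(PlainRegister(), B, C, K))
print("chained:", run(ChainedRegister(capacity=4), B, C, K))
```

With the plain register every FMUL reads the last sum written, whereas the chained register delivers the sums in the order they were produced, matching A = k * (B + C) element by element.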

These altered semantics are similar to the SSR semantics with respect to the following properties:

- the value which is stored in an architectural register can only be consumed once

- when a value is consumed, it releases pressure on the upstream unit

In this sense, the architecture of the core becomes closer to a dataflow architecture [5], with the difference that the RF is always the medium between any two functional units and operand consumption is still explicitly triggered by issuing standard RISC-V instructions.

Detailed task description

To break it down in more detail, you will:

- Literature review and related work research

  - Understand the architecture of the Snitch processor

  - Understand the concept of stream semantic registers (SSRs)

  - Review dataflow architecture concepts

  - Research related works

- Develop the scalar chaining extension in Snitch

  - Develop the RTL code to implement your extension

  - Develop synthetic test programs to verify your implementation

- Evaluate your extension

  - Synthesize the Snitch core complex and cluster to evaluate the PPA impact of your extension

  - Develop a set of micro-kernels to evaluate the performance improvements enabled by your extension

- Future work

  - Critically review the limitations of your extension

  - What could be done in the future to improve your work?

Stretch goals

Additional optional stretch goals may include:

- Dataflow operation without the need to explicitly trigger operand consumption through instruction issuing

- Implement some of the proposed future work items you may come up with

Character

- 15% Literature/architecture review

- 40% RTL design and verification

- 35% Baremetal software development

- 10% Physical design evaluation

Prerequisites

- Strong interest in computer architecture

- Experience with digital design in SystemVerilog as taught in VLSI I

- Preferred: Experience in bare-metal or embedded C programming

- Preferred: Experience with ASIC implementation flow as taught in VLSI II

References

[1] Snitch paper

[2] Snitch Github repository

[3] Stream Semantic Registers (SSRs)

[4] Occamy

[5] Dataflow architecture