Real-Time Stereo to Multiview Conversion

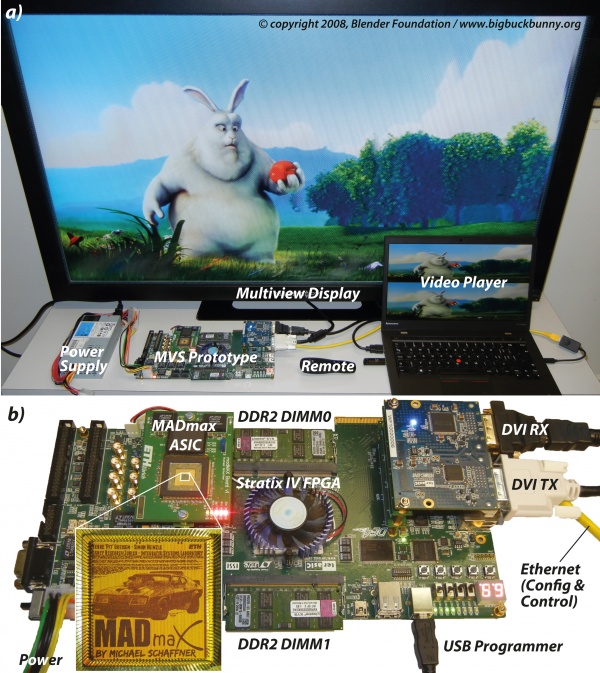

Figure: a) Multiview system in action and b) close-up of the hardware prototype.

Latest revision as of 09:09, 23 October 2015


Overview

Today, most commercially available 3D display systems require viewers to wear some sort of shutter or polarization glasses, which is often regarded as an inconvenience. Ideally, a 3D display system should not require users to wear additional gear. In fact, the optimum would be a display that replicates the original light field of a scene. So-called multiview autostereoscopic displays (MADs) represent a step in this direction, as they are able to project several views of a scene simultaneously, enabling a glasses-free 3D experience and a limited motion parallax effect in the horizontal direction.

However, creating appealing content for such displays is a difficult task. Moreover, storage and transmission of high-definition content with more than two views is costly and in some cases even infeasible. The fact that each MAD model has different parameters (number of views, number of viewing angles, etc.) exacerbates these problems. To bridge this content-display gap, so-called multiview synthesis (MVS) methods have been developed in recent years, which can generate several virtual views from a small set of input views. MVS is computationally intensive, yet it should run efficiently in real time and be portable to end-user devices in order to exploit its full potential.

In this work, we devise an efficient hardware architecture for a complete, image-domain-warping-based MVS pipeline, which is able to synthesize content for an 8-view full-HD display from full-HD S3D input at 30 fps. Our hybrid FPGA/ASIC prototype is one of the first real-time MVS systems implemented entirely in hardware. Such a dedicated hardware accelerator makes MVS portable and energy-efficient, both of which are essential properties when considering deployment in consumer electronic devices. Our system comprises all processing steps, including S3D video analysis, calculation of the warp transforms, rendering, and anti-alias filtering/interleaving for the MAD. The algorithms involved in these steps have all been revisited and jointly optimized with their corresponding hardware architectures. The developed hardware IP could be integrated into systems-on-chip (SoCs) for 3D TV sets or mobile devices, where it would serve as a power-efficient hardware accelerator.
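To give a rough feeling for the data rates behind the figures quoted above, the following back-of-the-envelope sketch computes pixel throughput for the stated configuration. It assumes that "full-HD" means 1920x1080 pixels and that the S3D input carries two full-resolution views per frame. Neither assumption is spelled out in the text, and the helper name `pixel_rate` is purely illustrative.

```python
# Back-of-the-envelope pixel rates for the figures quoted in the overview.
# Assumptions (not stated in the text): "full-HD" means 1920x1080 pixels,
# and the S3D input carries two full-resolution views per frame.

WIDTH, HEIGHT = 1920, 1080   # assumed full-HD resolution
FPS = 30                     # frame rate quoted in the overview
INPUT_VIEWS = 2              # stereo (S3D) input: left and right view
OUTPUT_VIEWS = 8             # number of views of the target display


def pixel_rate(views: int, width: int = WIDTH, height: int = HEIGHT,
               fps: int = FPS) -> int:
    """Pixels per second for `views` streams at the given size and frame rate."""
    return views * width * height * fps


# Pixels per second read from the stereo source.
input_rate = pixel_rate(INPUT_VIEWS)
# Pixels per second of the single interleaved image sent to the panel.
display_rate = pixel_rate(1)
# Upper bound: every one of the 8 views rendered at full resolution.
upper_bound = pixel_rate(OUTPUT_VIEWS)

if __name__ == "__main__":
    print(f"S3D input:         {input_rate / 1e6:.1f} Mpixel/s")
    print(f"Interleaved panel: {display_rate / 1e6:.1f} Mpixel/s")
    print(f"8 full-res views:  {upper_bound / 1e6:.1f} Mpixel/s (upper bound)")
```

The actual rendering load of the pipeline presumably lies somewhere between the panel rate and the upper bound, depending on how the views are sampled before interleaving. The overview does not specify this, so the numbers are only an orientation.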

Video

[Watch the video on YouTube](https://www.youtube.com/watch?v=QpRAINhAjgc)

Partners

Contact

- Michael Schaffner (schaffner(ät)iis.ee.ethz.ch), ETH Zürich

- Dr. Aljoscha Smolic (smolic(ät)disneyresearch.com), Disney Research Zurich

- Prof. Luca Benini (benini(ät)iis.ee.ethz.ch), ETH Zürich

Related Projects

- Real-time View Synthesis using Image Domain Warping

- Real-Time Stereo to Multiview Conversion

- Feature Extraction with Binarized Descriptors: ASIC Implementation and FPGA Environment

- A Multiview Synthesis Core in 65 nm CMOS