Implementation of an Accelerator for Retentive Networks (1-2S)

Overview

Status: Archived

- Type: Semester Thesis

- Professor: Prof. Dr. L. Benini

- Supervisors:

Introduction

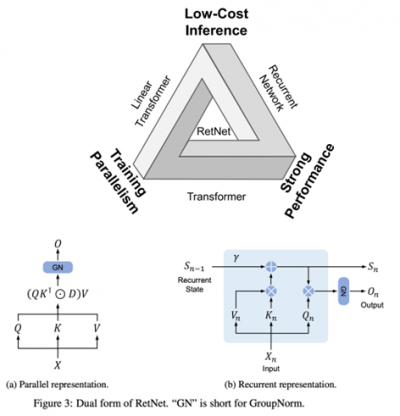

In the last few years, a new class of deep learning models, the Transformer architecture [1], has emerged. Originally developed for natural language processing (NLP), Transformers are now successfully applied to a wide range of problems such as text generation [2], text classification [3], image classification [4], image generation [5], and audio processing [6]. However, the attention mechanism requires pair-wise computation between all elements of the input sequence, so the number of operations scales quadratically with the sequence length. Additionally, the row-wise softmax function introduces complex data flow dependencies, which hinder parallelization of the training process.

Retentive Networks [7] are a novel alternative to Transformer networks that achieve better training parallelism, low-cost inference, and good performance. They replace multi-head attention (MHA) with a multi-scale retention (MSR) mechanism, which removes the softmax and admits both a parallel and a recurrent formulation. The parallel formulation allows training to fully utilize GPU devices, whereas the recurrent formulation keeps the memory and computation cost of each inference step at O(1) with respect to the sequence length.
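To make the two formulations concrete, the following is a minimal sketch of a single retention head in plain Python, following the equations in [7]. It is a simplified illustration, not the accelerator's specification: the position-dependent rotation of the queries and keys, the group normalization, the gating, and the multi-scale aspect (a different decay per head) are all omitted, and the function names and the scalar decay `gamma` are chosen here for illustration only.

```python
def retention_parallel(Q, K, V, gamma):
    """Parallel form: out[n] = sum over m <= n of gamma^(n-m) * (Q[n] . K[m]) * V[m]."""
    d_v = len(V[0])
    out = []
    for n in range(len(Q)):
        row = [0.0] * d_v
        for m in range(n + 1):  # causal: only positions m <= n contribute
            score = sum(q * k for q, k in zip(Q[n], K[m])) * gamma ** (n - m)
            for c in range(d_v):
                row[c] += score * V[m][c]
        out.append(row)
    return out


def retention_recurrent(Q, K, V, gamma):
    """Recurrent form: S_n = gamma * S_(n-1) + K[n]^T V[n], out[n] = Q[n] S_n."""
    d_k, d_v = len(K[0]), len(V[0])
    S = [[0.0] * d_v for _ in range(d_k)]  # fixed-size state, independent of sequence length
    out = []
    for q, k, v in zip(Q, K, V):
        # Decay the old state and add the outer product of the current key and value.
        for a in range(d_k):
            for c in range(d_v):
                S[a][c] = gamma * S[a][c] + k[a] * v[c]
        # Read out the state with the current query.
        out.append([sum(q[a] * S[a][c] for a in range(d_k)) for c in range(d_v)])
    return out
```

Both functions compute the same outputs up to floating-point rounding. The recurrent version only ever stores the `d_k` by `d_v` state matrix `S`, which is why the per-step cost does not grow with the sequence length.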

The demand for high performance under a limited power budget has led to the development of heterogeneous platforms. General-purpose processors have been accompanied by accelerators for improved performance and energy efficiency in highly parallel specific tasks such as encryption, signal processing and machine learning. While the improvements in inference efficiency enabled by Retentive Networks are certainly impressive, there is currently no public research on enabling their execution on embedded and constrained hardware.

Project

In this project, we aim to lay the foundation for a retention accelerator that is able to execute the main layers in Retentive Networks with little to no intervention of a host system. We further target the integration of said accelerator with the PULPissimo SoC system \cite{8640145} to enable a full-fledged system.

The main tasks of this project are:

- Familiarize Yourself with Retentive Networks: Study the recent work on Retentive Networks and the mathematical formulation of the multi-scale retention mechanism.

- Specification of the Accelerator Architecture: Develop a block diagram of the accelerator architecture that integrates the system memory, interfaces, and processing elements.

- Implementation and Verification of the Accelerator RTL: Implement and verify the accelerator specified in the previous task. A full implementation consists not only of the functional RTL describing the accelerator, but also of the design and implementation of testbenches that allow debugging of the system.

- Measurement and Evaluation of the Proposed System: Synthesize and place-and-route the accelerator to obtain key metrics such as area and longest path. Simulate the design with practical input data and estimate its power consumption from a post-layout simulation.

- (Stretch Goal) Integration of the Accelerator with the PULPissimo SoC: Integrate the accelerator with the PULPissimo SoC, allowing it to be used in practical applications. This includes designing the necessary interfaces and adapting the protocols to achieve full compatibility with the PULP ecosystem.

Character

- Literature/Architecture Review: 20% of the project will involve understanding the theoretical foundations and design principles of Transformers, attention/retention mechanisms, and hardware-friendly softmax algorithms.

- RTL Implementation: The majority (50%) of the project will be dedicated to hands-on coding, where the student will implement a hardware accelerator for the retention mechanism.

- Evaluation: The final 30% will focus on systematically evaluating the algorithms' performance and analyzing the results.

Prerequisites

- Experience with digital design in SystemVerilog as taught in VLSI I

- Experience with ASIC implementation flow (synthesis) as taught in VLSI II

- Fundamental deep learning concepts.

- Numerical representation formats (integer, fixed-point, floating-point).

References

[2] I-BERT: Integer-only BERT Quantization

[4] An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale

[5] DALL·E 2

[6] Real-Time Target Sound Extraction

[7] Retentive Network: A Successor to Transformer for Large Language Models