Investigation of Quantization Strategies for Retentive Networks (1S)

Overview

Status: In Progress

- Type: Semester Thesis

- Professor: Prof. Dr. L. Benini

- Student: Hannes Stählin

- Supervisors:

- Jannis Schönleber (IIS): janniss@iis.ee.ethz.ch

- Philip Wiese (IIS): wiesep@iis.ee.ethz.ch

- Victor Jung (IIS): jungvi@iis.ee.ethz.ch

Introduction

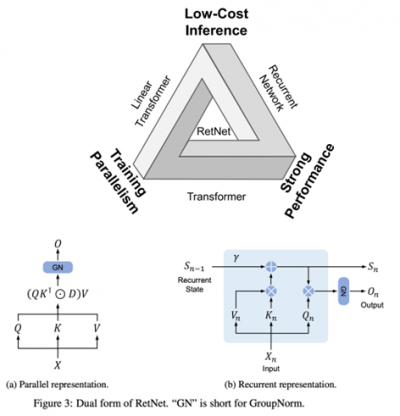

In recent years, a new class of deep learning models, the Transformer architecture [1], has emerged. Originally developed for natural language processing (NLP), Transformers are now successfully applied to a wide range of problems, such as text generation [2], text classification [3], image classification [4], image generation [5], and audio processing [6]. However, the attention mechanism requires pairwise computations between all elements of the input sequence, so the number of operations scales quadratically with the sequence length. Additionally, the row-wise softmax introduces complex data-flow dependencies that hinder parallelization and rule out a recurrent formulation, so the per-token inference cost grows with the sequence length.

Retentive Networks [7] are a novel alternative to Transformers that combine training parallelism, low-cost inference, and good performance. They achieve this by replacing multi-head attention (MHA) with a multi-scale retention (MSR) mechanism. MSR removes the softmax in favor of an exponential decay, with a different decay rate per head, and therefore admits both a parallel and a recurrent formulation. The parallel representation allows training to fully utilize GPUs, whereas the recurrent representation enables inference with O(1) memory and computation per generated token.
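The equivalence of the two formulations can be illustrated with a minimal single-head NumPy sketch. It uses the simplified retention of [7], O = (QKᵀ ⊙ D)V, with a causal decay matrix D[n, m] = γ^(n−m) for n ≥ m and 0 otherwise. It deliberately omits the rotary (xPos-style) position term, the multiple heads with different γ, group normalization, and gating of the full MSR block. The function names are illustrative and not taken from any library.

```python
import numpy as np


def decay_mask(n: int, gamma: float) -> np.ndarray:
    """Causal decay matrix: D[i, j] = gamma**(i - j) if i >= j, else 0."""
    idx = np.arange(n)
    diff = idx[:, None] - idx[None, :]
    # Clamp negative exponents to 0 before masking to avoid huge intermediate values.
    return np.where(diff >= 0, gamma ** np.maximum(diff, 0), 0.0)


def retention_parallel(q: np.ndarray, k: np.ndarray, v: np.ndarray, gamma: float) -> np.ndarray:
    """Parallel (training-time) form: O = (Q K^T * D) V, quadratic in sequence length."""
    scores = (q @ k.T) * decay_mask(q.shape[0], gamma)
    return scores @ v


def retention_recurrent(q: np.ndarray, k: np.ndarray, v: np.ndarray, gamma: float) -> np.ndarray:
    """Recurrent (inference-time) form: S_t = gamma * S_{t-1} + k_t^T v_t, o_t = q_t S_t."""
    n, d_k = q.shape
    d_v = v.shape[1]
    state = np.zeros((d_k, d_v))  # fixed-size state, independent of sequence length
    out = np.empty((n, d_v))
    for t in range(n):
        state = gamma * state + np.outer(k[t], v[t])
        out[t] = q[t] @ state
    return out


rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((6, 4)) for _ in range(3))
print(np.allclose(retention_parallel(q, k, v, 0.9), retention_recurrent(q, k, v, 0.9)))  # True
```

Each recurrent step only updates the d_k × d_v state. This is where the constant per-token cost comes from. The parallel form computes all outputs at once with matrix products that map well onto GPUs.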

Given the trend toward deploying complex neural networks on embedded devices with strict power and memory constraints, quantization is an important optimization technique. It reduces the cost of model inference by representing weights and activations with fewer bits, for example 8-bit integers instead of 32-bit floating-point values. This enables faster and more energy-efficient inference, typically at the cost of some accuracy.
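As a concrete illustration of "fewer bits", the sketch below applies symmetric per-tensor uniform quantization to a weight matrix. Each float32 value is replaced by an 8-bit integer, and the whole tensor shares one float scale. This cuts storage by roughly 4× and bounds the rounding error by half a quantization step. The helper names are hypothetical and do not correspond to QuantLib's API.

```python
import numpy as np


def quantize_symmetric(x: np.ndarray, n_bits: int = 8):
    """Symmetric per-tensor quantization to signed integers with one shared float scale."""
    assert 2 <= n_bits <= 8, "this sketch stores the result as int8"
    qmax = 2 ** (n_bits - 1) - 1  # e.g. 127 for 8 bits
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / qmax if max_abs > 0 else 1.0
    x_int = np.clip(np.round(x.astype(np.float64) / scale), -qmax, qmax).astype(np.int8)
    return x_int, scale


def dequantize(x_int: np.ndarray, scale: float) -> np.ndarray:
    """Map integers back to (approximate) real values."""
    return x_int.astype(np.float64) * scale


rng = np.random.default_rng(0)
w = rng.standard_normal((256, 256)).astype(np.float32)  # float32 weight matrix
w_int, s = quantize_symmetric(w)
w_hat = dequantize(w_int, s)

print(w.nbytes, w_int.nbytes)                      # 262144 65536
print(np.max(np.abs(w - w_hat)) <= s / 2 + 1e-9)   # True: at most half a quantization step
```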

In general, quantization can be achieved in two ways: post-training quantization (PTQ) and quantization-aware training (QAT) [8]. In PTQ, the weights and activations of a pre-trained network are quantized after training is complete. This involves mapping the floating-point values to integers, typically by deriving scaling factors from value ranges observed on a small calibration dataset. The quantization parameters are chosen so as to minimize the accuracy loss caused by quantization.
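A simple and common PTQ calibration scheme is min-max calibration. The pre-trained model is run on a few representative batches, and the running minimum and maximum of each activation tensor are recorded. An affine 8-bit mapping (scale and zero point) is then derived from that range. The class below is a hypothetical stand-alone sketch of such an observer, not QuantLab code. More elaborate PTQ methods choose the clipping range to minimize quantization error instead of using the raw min/max.

```python
import numpy as np


class MinMaxObserver:
    """Hypothetical calibration helper: tracks the range of a tensor over calibration batches."""

    def __init__(self, n_bits: int = 8):
        assert n_bits <= 8, "this sketch stores quantized values as uint8"
        self.qmin, self.qmax = 0, 2 ** n_bits - 1  # unsigned integer grid
        self.lo, self.hi = np.inf, -np.inf

    def observe(self, x: np.ndarray) -> None:
        self.lo = min(self.lo, float(x.min()))
        self.hi = max(self.hi, float(x.max()))

    def qparams(self):
        """Affine parameters such that real ~= (q - zero_point) * scale."""
        lo, hi = min(self.lo, 0.0), max(self.hi, 0.0)  # keep 0.0 exactly representable
        scale = (hi - lo) / (self.qmax - self.qmin) if hi > lo else 1.0
        zero_point = int(round(self.qmin - lo / scale))
        return scale, zero_point

    def quantize(self, x: np.ndarray) -> np.ndarray:
        scale, zp = self.qparams()
        return np.clip(np.round(x / scale) + zp, self.qmin, self.qmax).astype(np.uint8)


# Calibrate on a few batches of (synthetic) post-ReLU activations, then quantize new data.
rng = np.random.default_rng(0)
obs = MinMaxObserver()
for _ in range(10):
    obs.observe(np.maximum(rng.standard_normal(1000), 0.0))

scale, zp = obs.qparams()
x = np.maximum(rng.standard_normal(1000), 0.0)
x_hat = (obs.quantize(x).astype(np.float64) - zp) * scale  # dequantized activations
```

Values outside the calibrated range are clipped to the nearest grid end. This is why the calibration data should be representative of the inputs seen at inference time.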

QAT, in contrast, trains the model with the intention of quantizing it afterward. During training, quantization effects are simulated by rounding weights and activations to lower precision in the forward pass. The model therefore learns parameters that perform well under quantization constraints.
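In practice, this simulation is usually implemented with "fake quantization". The forward pass rounds values onto the integer grid and immediately rescales them to floating point. The backward pass treats the non-differentiable rounding as the identity (the straight-through estimator, STE) and only zeroes the gradient where values were clipped. The NumPy sketch below writes out both passes by hand for a single scalar weight in a toy linear model. In PyTorch, the same behaviour would typically be obtained with a custom autograd function. All names here are illustrative.

```python
import numpy as np


def fake_quant(x, scale, qmin=-8, qmax=7):
    """Forward pass: round onto a signed 4-bit grid, then rescale back to float."""
    return np.clip(np.round(x / scale), qmin, qmax) * scale


def fake_quant_grad(x, grad_out, scale, qmin=-8, qmax=7):
    """Backward pass with the straight-through estimator: rounding is treated as identity,
    but the gradient is zeroed where the input was clipped."""
    inside = (x / scale >= qmin) & (x / scale <= qmax)
    return grad_out * inside


# Toy QAT problem: learn w such that fake_quant(w) * x reproduces y = 0.75 * x.
rng = np.random.default_rng(0)
x = rng.standard_normal(256)
y = 0.75 * x
w, scale, lr = 0.1, 0.125, 0.05  # latent float weight, fixed step size, learning rate

for _ in range(200):
    w_q = fake_quant(w, scale)                        # quantized weight used in the forward pass
    grad_wq = np.mean(2.0 * (w_q * x - y) * x)        # dL/dw_q for the mean-squared error
    w = w - lr * fake_quant_grad(w, grad_wq, scale)   # STE: dL/dw is approximated by dL/dw_q

print(fake_quant(w, scale))  # 0.75
```

The latent float weight w keeps receiving small updates. Its quantized version settles on the grid point 0.75, where the loss is exactly zero. If the target lies between two grid points, the same loop tends to oscillate between them, a known difficulty of QAT.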

Project

The objective of this thesis is to investigate quantization strategies for retentive networks, in particular for the multi-scale retention (MSR) mechanism. To facilitate this, we have developed QuantLab [9], a versatile and modular framework for training, comparing, and quantizing neural networks. The framework is based on PyTorch and supports sub-byte and mixed-precision quantization. Its core component, QuantLib, already includes several QAT algorithms, such as PACT [10] and TQT [11].
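For reference, the core of PACT [10] fits in a few lines. Activations are clipped to a learnable upper bound α and quantized uniformly to k bits on [0, α]. Under the straight-through estimator, α receives gradient only from inputs that were clipped. The sketch below is a plain NumPy transcription of these equations, not QuantLib's implementation, and it leaves out the training loop and any regularization of α.

```python
import numpy as np


def pact_forward(x: np.ndarray, alpha: float, k: int = 4) -> np.ndarray:
    """Clip activations to [0, alpha] and quantize them uniformly to k bits."""
    levels = 2 ** k - 1
    y = np.clip(x, 0.0, alpha)
    return np.round(y * levels / alpha) * alpha / levels


def pact_backward(x: np.ndarray, grad_out: np.ndarray, alpha: float):
    """STE gradients (rounding treated as identity):
    d y_q / d x     = 1 if 0 <= x < alpha else 0
    d y_q / d alpha = 1 if x >= alpha     else 0  (only clipped inputs update alpha)
    """
    grad_x = grad_out * ((x >= 0.0) & (x < alpha))
    grad_alpha = float(np.sum(grad_out * (x >= alpha)))
    return grad_x, grad_alpha


x = np.array([-1.0, 0.2, 0.9, 1.7, 3.0])
y_q = pact_forward(x, alpha=1.5, k=2)  # 2 bits on [0, 1.5]: levels {0, 0.5, 1.0, 1.5}
grad_x, grad_alpha = pact_backward(x, np.ones_like(x), alpha=1.5)
print(y_q.tolist())                 # [0.0, 0.0, 1.0, 1.5, 1.5]
print(grad_x.tolist(), grad_alpha)  # [0.0, 1.0, 1.0, 0.0, 0.0] 2.0
```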

The main tasks of this project are:

- Familiarize Yourself with Retentive Networks: Become familiar with recent work on retentive networks and the mathematical formulation of the multi-scale retention mechanism. This also includes training a retentive network in PyTorch.

- Extend QuantLib to Support Quantized MSR: Explore possible solutions to quantize the MSR operation and add support to QuantLib.

- Evaluate the Performance: Evaluate and compare the performance of the quantized and full-precision multi-scale retention operation, then repeat the comparison for a complete retentive network.

- (Stretch Goal) Deploy Retentive Networks: Add support for the quantized MSR to Deeploy, a tool developed at IIS that facilitates fully automated conversion, optimization, and deployment of neural networks to C-programmable platforms.
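One possible starting point for the quantized-MSR and evaluation tasks is an integer-only version of the single-head parallel retention O = (QKᵀ ⊙ D)V, sketched here under simplifying assumptions. Q, K, and V are quantized to signed b-bit integers with per-tensor scales. The decay matrix D is stored as unsigned fixed-point integers with F fractional bits. All products are accumulated in 64-bit integers, and a single float rescale is applied at the end. Comparing the result against full precision gives the kind of error metric the evaluation task calls for. The per-tensor scaling, the fixed-point format of D, and all function names are illustrative choices, not the approach QuantLib or Deeploy actually uses. The rotation, group normalization, and gating of the full MSR block are again omitted.

```python
import numpy as np


def decay_mask(n, gamma):
    """Causal decay matrix D[i, j] = gamma**(i - j) for i >= j, else 0."""
    diff = np.arange(n)[:, None] - np.arange(n)[None, :]
    return np.where(diff >= 0, gamma ** np.maximum(diff, 0), 0.0)


def quantize_symmetric(x, n_bits):
    """Per-tensor symmetric quantization; returns integers (as int64) and the scale."""
    qmax = 2 ** (n_bits - 1) - 1
    scale = float(np.max(np.abs(x))) / qmax or 1.0
    return np.clip(np.round(x / scale), -qmax, qmax).astype(np.int64), scale


def retention_float(q, k, v, gamma):
    """Full-precision reference: O = (Q K^T * D) V."""
    return ((q @ k.T) * decay_mask(q.shape[0], gamma)) @ v


def retention_int(q, k, v, gamma, n_bits=8, frac_bits=15):
    """Integer-only retention; with n_bits <= 8 and short sequences int64 cannot overflow."""
    q_i, s_q = quantize_symmetric(q, n_bits)
    k_i, s_k = quantize_symmetric(k, n_bits)
    v_i, s_v = quantize_symmetric(v, n_bits)
    # Decay factors lie in [0, 1]; store them as unsigned fixed point with frac_bits fractional bits.
    d_fix = np.round(decay_mask(q.shape[0], gamma) * 2 ** frac_bits).astype(np.int64)
    acc = ((q_i @ k_i.T) * d_fix) @ v_i  # integer-only accumulation
    # One final rescale folds all three scales and the fixed-point shift together.
    return acc * (s_q * s_k * s_v / 2 ** frac_bits)


def relative_error(ref, approx):
    return np.linalg.norm(ref - approx) / np.linalg.norm(ref)


rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((16, 8)) for _ in range(3))
ref = retention_float(q, k, v, gamma=0.9)
for bits in (8, 4):
    print(bits, relative_error(ref, retention_int(q, k, v, gamma=0.9, n_bits=bits)))
```

The 4-bit run should show a markedly larger error than the 8-bit run, which is the trend the evaluation would quantify on a real retentive network. A deployable kernel would also requantize the intermediate (QKᵀ ⊙ D) scores to a narrower integer type instead of keeping 64-bit accumulators. The recurrent form would need a similar treatment for its state.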

Character

- Literature/Architecture Review: 10% of the project will involve understanding the theoretical foundations and design principles of Transformers, attention/retention mechanisms, and quantization techniques.

- Python Coding: The majority (60%) of the project will be dedicated to hands-on coding, where the student will implement and experiment with various quantization algorithms.

- Evaluation: The final 30% will focus on systematically evaluating the performance of the quantized retention mechanism and analyzing the results.

Prerequisites

- Fundamental deep learning concepts.

- Numerical representation formats (integer, fixed-point, floating-point).

- Proficiency in Python programming.

- Familiarity with the PyTorch deep learning framework.

References

[2] I-BERT: Integer-only BERT Quantization

[4] An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale

[5] DALL·E 2

[6] Real-Time Target Sound Extraction

[7] Retentive Network: A Successor to Transformer for Large Language Models

[8] A Survey of Quantization Methods for Efficient Neural Network Inference

[9] QuantLab: a Modular Framework for Training and Deploying Mixed-Precision NNs

[10] PACT: Parameterized Clipping Activation for Quantized Neural Networks