Physical Implementation of ITA (2S)

Overview

Status: Available

- Type: Semester Thesis

- Professor: Prof. Dr. L. Benini

- Supervisors:

Introduction

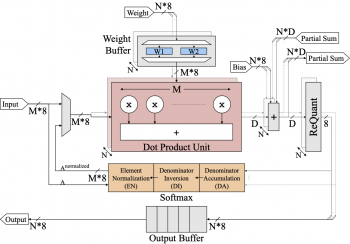

Transformers [1], initially popularized in Natural Language Processing (NLP), have since found applications well beyond it and are now integral to a wide range of deep learning tasks. However, efficient hardware acceleration of transformer models poses new challenges due to their high arithmetic intensity, large memory requirements, and complex dataflow dependencies. To address these challenges, we designed ITA, the Integer Transformer Accelerator [2], which targets efficient transformer inference on embedded systems by exploiting 8-bit quantization and an innovative softmax implementation that operates exclusively on integer values.

ITA exploits the parallelism of the attention mechanism and 8-bit integer quantization to improve performance and energy efficiency. To maximize ITA’s energy efficiency, we focus on minimizing data movement throughout the execution of the attention mechanism. In contrast to throughput-oriented accelerator designs, which typically employ systolic arrays, ITA implements its processing elements as wide dot-product units, allowing us to maximize the depth of the adder trees and thereby further increase efficiency.
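As a rough illustration of what a wide dot-product unit with an adder tree computes, the following Python sketch multiplies two integer vectors element-wise and reduces the products pairwise, level by level. It is a behavioral model only, not ITA's RTL; the function name and the vector width of 64 in the usage note are assumptions made for this example.

```python
def dot_product_adder_tree(a, b):
    """Behavioral model of a dot-product unit (illustrative, not ITA's RTL).

    Returns the dot product and the number of adder-tree levels used.
    """
    assert len(a) == len(b) and len(a) > 0
    # One multiplier per element pair.
    level = [x * y for x, y in zip(a, b)]
    depth = 0
    # Pairwise reduction: each loop iteration models one level of adders.
    while len(level) > 1:
        if len(level) % 2:
            level.append(0)  # pad odd-sized levels with a zero operand
        level = [level[i] + level[i + 1] for i in range(0, len(level), 2)]
        depth += 1
    return level[0], depth
```

For a hypothetical width of 64, `dot_product_adder_tree([1] * 64, [2] * 64)` returns `(128, 6)`: a wider unit needs only logarithmically more adder levels per result.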

To overcome the complex dataflow requirements of standard softmax, we use a novel approach that performs softmax directly on 8-bit integer quantized values in a streaming fashion. This energy- and area-efficient softmax implementation operates entirely in integer arithmetic, occupies only 3.3% of ITA’s total area, and has a mean absolute error of 0.46% compared to its floating-point counterpart. The approach also enables a weight-stationary dataflow by decoupling denominator summation and division in softmax. The streaming softmax operation and weight-stationary dataflow, in turn, minimize data movement in the system and thus power consumption.
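The idea of decoupling denominator summation from division can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not ITA's actual algorithm: it approximates the exponential by a power of two computed with shifts, and the constants `SHIFT_BITS` and `FRAC_BITS`, the chunk size, and all function names are hypothetical choices for this example.

```python
SHIFT_BITS = 5   # assumption: 32 logit steps correspond to one halving
FRAC_BITS = 8    # assumption: fixed-point precision of one exponent term
INT8_MIN = -128


def exp2_term(x, ref_max):
    """Integer stand-in for exp(x - ref_max): a power of two built with shifts."""
    return (1 << FRAC_BITS) >> ((ref_max - x) >> SHIFT_BITS)


def streaming_softmax_int8(row, chunk=4):
    """Approximate softmax over int8 logits using integer operations only."""
    run_max = INT8_MIN
    denom = 0
    # Phase 1: logits arrive chunk by chunk; only the running maximum and
    # the running denominator are kept as state.
    for start in range(0, len(row), chunk):
        part = row[start:start + chunk]
        new_max = max(run_max, max(part))
        # Rescale the accumulated sum when the maximum grows.
        denom >>= (new_max - run_max) >> SHIFT_BITS
        run_max = new_max
        denom += sum(exp2_term(x, run_max) for x in part)
    # Phase 2: the division happens later, decoupled from the accumulation.
    return [(exp2_term(x, run_max) * 255) // denom for x in row]
```

Outputs are unsigned 8-bit values in which 255 represents a probability of 1. For example, `streaming_softmax_int8([0, 32], chunk=1)` returns `[85, 170]`, i.e. roughly 1/3 and 2/3, because a difference of 32 maps to one halving in this model.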

ITA was evaluated in GlobalFoundries’ 22FDX fully-depleted silicon-on-insulator (FD-SOI) technology, where it achieves an energy efficiency of 16.9 TOPS/W and an area efficiency of 5.93 TOPS/mm² at 0.8 V. This is on par with the state of the art in energy efficiency, despite the much less aggressive technology node, and 2× better in area efficiency.

This project aims to tape out a smaller version of ITA as a proof of concept.

Project

- Finalizing the RTL design: Familiarize yourself with the current state of the project and finalize the system integration of ITA. Rerun RTL simulations to verify your design.

- Synthesize & Place-and-Route: Set up and integrate the design flow (most likely TSMC 65 nm) into the project. Synthesize, place, and route the design. Confirm your implementation with post-layout simulation.

- Prepare for the tape-out: Make sure that you are ready for a design review: http://eda.ee.ethz.ch/index.php?title=Design_review

Character

- Familiarizing yourself with ITA: 20% of the project will involve understanding how ITA works and running simulations to verify its functionality in the system.

- Physical implementation: The majority (60%) of the project will be dedicated to physical implementation, i.e. synthesis, place-and-route and design review.

- Evaluation: The final 20% will focus on evaluating the design and preparing the report and presentation.

Prerequisites

- Experience with digital design in SystemVerilog as taught in VLSI I

- Experience with ASIC implementation flow (synthesis) as taught in VLSI II

- Some experience with C or a comparable language for low-level SW glue code

References

[2] ITA: An Energy-Efficient Attention and Softmax Accelerator for Quantized Transformers