Accelerating Matrix Multiplication on a 216-core MPSoC (1M)



Overview

Status: In progress

- Type: Master or Semester thesis

- Professor: Prof. Dr. L. Benini

- Supervisors:

- Student: Roger Barton rbarton@student.ethz.ch

Introduction

General Matrix Multiplication (GEMM) is one of the fundamental operations at the core of many crucial workloads in modern computing, including scientific computing and deep learning. Finding optimal ways to schedule and parallelize GEMM has been a hot topic for many years [1, 2], but no single solution fits all cases, and implementations have to be adapted to newly proposed hardware features.

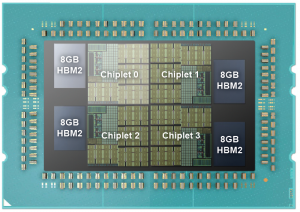

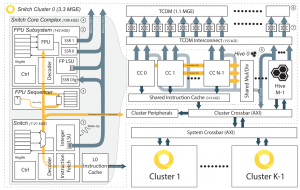

Occamy is a research prototype to demonstrate and explore the scalability, performance, and efficiency of our RISC-V-based architecture in a 2.5D integrated chiplet system. It is a realization of the Manticore concept architecture presented at the Hot Chips 2020 conference [5]. It features 432 cores, 216 per chiplet, grouped in clusters of 8 cores each. Every cluster contains a 128 KB tightly-coupled data memory (TCDM) and eight small, highly efficient, in-order, 32-bit RISC-V integer cores called Snitch [3]. Every Snitch core is coupled with a large multi-precision floating-point unit (FPU) enhanced with single instruction multiple data (SIMD) capabilities. To achieve ultra-efficient computation on data-parallel FP workloads, two custom architectural extensions are exploited: data-prefetchable register file entries and repetition buffers. The corresponding RISC-V ISA extensions, stream semantic registers (SSRs) [4] and FP repetition instructions (FREP) [3], enable the Snitch core to achieve FPU utilization higher than 90% for compute-bound kernels.

Occamy's interconnect and memory subsystem are also optimized to keep the processing elements busy and to prevent the operation from becoming memory-bound. To this end, every cluster is equipped with a DMA engine connected to a wide 512-bit interconnect, which allows data to be transferred quickly between clusters on-chip. The interconnect is further enhanced with multicast capabilities, enabling one cluster to send data to multiple other clusters simultaneously.
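To give a feeling for the figures quoted above, the following back-of-the-envelope sketch derives a few numbers from the 128 KB TCDM and the 512-bit interconnect. It assumes double-precision (8-byte) operands, reads "128 KB" as 128 × 1024 bytes, and assumes one 512-bit beat per cycle; the helper `largest_square_tile` is a hypothetical name introduced only for this illustration.

```python
import math

TCDM_BYTES = 128 * 1024   # 128 KB TCDM per cluster (from the text), read as KiB
BEAT_BYTES = 512 // 8     # 512-bit interconnect -> 64 bytes per beat
FP64_BYTES = 8            # assumption: double-precision operands


def largest_square_tile(capacity_bytes, elem_bytes, buffers):
    """Largest t such that `buffers` tiles of t x t elements fit in capacity."""
    return math.isqrt(capacity_bytes // (buffers * elem_bytes))


# One tile each of A, B and C resident in the TCDM.
single_buffered = largest_square_tile(TCDM_BYTES, FP64_BYTES, buffers=3)

# Two copies of each tile, so the DMA can fill one while the cores use the other.
double_buffered = largest_square_tile(TCDM_BYTES, FP64_BYTES, buffers=6)

# Cycles needed to fill the whole TCDM at one beat per cycle (assumption).
fill_cycles = TCDM_BYTES // BEAT_BYTES

print(single_buffered, double_buffered, fill_cycles)
```

Under these assumptions, the TCDM capacity rather than the interconnect width is what limits the tile size, which is one reason the choice of tiling matters so much on this architecture.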

Occamy’s architecture opens up new opportunities to accelerate GEMM by leveraging its custom hardware extensions and distributed memory design.

Project description

In this project, you will study and implement different parallelization schemes for GEMM on Occamy and build an automatic tiler based on constraint programming and modelling. With the help of the auto-tiler, you will strive to achieve the best possible GEMM implementation on Occamy for a variety of problem sizes, and compare your results on Occamy to the state-of-the-art (SotA) performance on traditional architectures.
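As a rough illustration of what an auto-tiler has to decide, the sketch below picks tile sizes for C = A·B that minimize an estimated DRAM traffic while fitting in one cluster's TCDM. It is not the constraint-programming approach named above: it uses plain exhaustive search as a stand-in. The traffic and footprint formulas, the restriction to tile sizes that divide the problem size, the FP64 element size, and the function names `divisors` and `best_tiling` are all assumptions made for this example.

```python
from itertools import product


def divisors(n):
    """All positive divisors of n (tiles are assumed to divide the problem)."""
    return [d for d in range(1, n + 1) if n % d == 0]


def best_tiling(M, N, K, capacity_bytes=128 * 1024, elem_bytes=8):
    """Exhaustively search (tm, tn, tk) minimizing modelled DRAM traffic.

    Assumed model: a tm x tn tile of C stays in the TCDM while tm x tk tiles
    of A and tk x tn tiles of B are streamed in, double-buffered.
    Returns (traffic_in_elements, (tm, tn, tk)).
    """
    budget = capacity_bytes // elem_bytes  # TCDM capacity in elements
    best = None
    for tm, tn, tk in product(divisors(M), divisors(N), divisors(K)):
        # Two buffers each for the A and B tiles, one for the C tile.
        footprint = 2 * tk * (tm + tn) + tm * tn
        if footprint > budget:
            continue
        # A is re-read once per column block of C, B once per row block,
        # and C is written back once.
        traffic = M * K * (N // tn) + K * N * (M // tm) + M * N
        key = (traffic, -tk)  # tie-break: prefer larger tk (longer DMA bursts)
        if best is None or key < best[0]:
            best = (key, (tm, tn, tk))
    if best is None:
        raise ValueError("no tiling fits in the given capacity")
    return best[0][0], best[1]


traffic, (tm, tn, tk) = best_tiling(256, 256, 256)
print(traffic, tm, tn, tk)
```

A real tiler would add further constraints (alignment, multi-cluster distribution, multicast) and would replace the brute-force loop with a constraint solver once the search space grows.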

Detailed task description

To break it down in more detail, you will:

- Review the literature on GEMM implementations

- Understand I/O optimality bounds and scheduling implications

- Understand the workings of SotA implementations (Goto's algorithm and CAKE [1])

- Review the Occamy architecture

- Develop a highly optimized multi-cluster GEMM kernel

- Optimize single-thread performance: SSRs, FREP, minifloats, hand-optimized assembly (you will review an existing implementation)

- Parallelize over multiple cores in a cluster (you will review an existing implementation)

- Optimize data movement from DRAM to the L1 memory

- Parallelize over multiple clusters: explore cluster-to-cluster communication and use of multicast extension

- Model the performance of your kernel for arbitrary problem sizes

- Develop an automated deployment solution for GEMM on Occamy based on your model

- Evaluate your work

- Evaluate the accuracy of your performance model

- Evaluate the performance of implementations produced by your tiler on Occamy against other SotA architectures
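The performance-modelling task in the list above could start from a simple bound such as the one sketched here: with double buffering, the time per tile is roughly the maximum of the compute time and the DMA transfer time. The numbers used are assumptions for illustration only: 8 cores per cluster and a 64-byte-per-cycle DMA follow from the architecture description, while one FP64 fused multiply-add (2 FLOP) per core per cycle and the 90% utilization figure applied uniformly are simplifications. `estimate_tile_cycles` is a hypothetical helper, not part of any existing Occamy software.

```python
def estimate_tile_cycles(tm, tn, tk, cores=8, flop_per_core_cycle=2,
                         utilization=0.9, dma_bytes_per_cycle=64,
                         elem_bytes=8):
    """Estimate cycles for one tm x tn x tk tile step on a single cluster.

    Returns (cycles, bound), where bound is "compute" or "memory".
    All default parameter values are illustrative assumptions.
    """
    # 2 FLOP (multiply + add) per inner-product term.
    flop = 2 * tm * tn * tk
    compute_cycles = flop / (cores * flop_per_core_cycle * utilization)

    # Bytes streamed in for this step: one tile of A and one tile of B.
    dma_bytes = (tm * tk + tk * tn) * elem_bytes
    dma_cycles = dma_bytes / dma_bytes_per_cycle

    # With double buffering, compute and DMA overlap, so the slower one wins.
    if compute_cycles >= dma_cycles:
        return compute_cycles, "compute"
    return dma_cycles, "memory"


large = estimate_tile_cycles(64, 128, 16)  # large output tile
small = estimate_tile_cycles(1, 1, 64)     # degenerate 1 x 1 output tile
print(large, small)
```

Comparing such estimates against measured cycle counts is one way to approach the model-accuracy evaluation listed above.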

Stretch goals

Additional optional stretch goals may include:

- Generalize your work to a wider selection of kernels exhibiting similar characteristics to GEMM

Character

- 20% Literature/architecture review

- 30% Bare-metal software development

- 10% Performance modeling

- 20% Auto-tiler development in Python

Prerequisites

- Ability to understand RTL code and navigate simulation waveforms

- Experience in bare-metal or embedded C programming

- Experience in parallel programming (e.g. to the level taught in the SoCDAML class)

References

[1] CAKE: Matrix Multiplication Using Constant-Bandwidth Blocks

[2] Theory and Practice of Classical Matrix-Matrix Multiplication for Hierarchical Memory Architectures

[3] Snitch: A Tiny Pseudo Dual-Issue Processor for Area and Energy Efficient Execution of Floating-Point Intensive Workloads

[4] Stream Semantic Registers: A Lightweight RISC-V ISA Extension Achieving Full Compute Utilization in Single-Issue Cores

[5] Manticore: A 4096-Core RISC-V Chiplet Architecture for Ultraefficient Floating-Point Computing

[6] Red-Blue Pebbling Revisited: Near Optimal Parallel Matrix-Matrix Multiplication

[7] TSM2X: High-Performance Tall-and-Skinny Matrix-Matrix Multiplication on GPUs

[8] A coordinated tiling and batching framework for efficient GEMM on GPUs

[9] Coordinated DMA: Improving the DRAM Access Efficiency for Matrix Multiplication