Bringing XNOR-nets (ConvNets) to Silicon


Revision as of 18:50, 12 December 2016

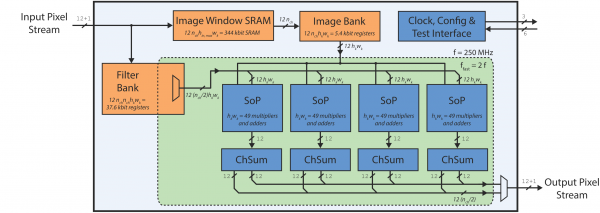

Short Description

Imaging sensor networks, UAVs, smartphones, and other embedded computer vision systems require power-efficient, low-cost, and high-speed implementations of synthetic vision systems capable of recognizing and classifying objects in a scene. Many popular algorithms in this area require evaluating multiple layers of filter banks. Almost all state-of-the-art synthetic vision systems are based on features extracted using multi-layer convolutional networks (ConvNets). When evaluating ConvNets, 80% to 90% of the time is spent performing the convolutions.

We have built an accelerator for this, Origami, which has been very successful, and we have since moved towards lower-precision implementations with our YodaNN implementation. Energy efficiency is critical for bringing ConvNets to mobile devices, and I/O limits how far we can go. Fully binary ConvNets could improve on this by requiring only ~1 bit/pixel instead of the ~12 bit/pixel needed before. The current state of the art in this direction is XNOR-Net, but we want to understand these networks better and use their structure to build an even more efficient ConvNet accelerator with almost no multipliers and relatively small adders.
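
To illustrate why fully binary ConvNets need almost no multipliers, the following minimal Python sketch computes the dot product of two {-1, +1} vectors using only XNOR and a bit count. It is an illustration under stated assumptions, not the accelerator's design: the helper names (`pack_signs`, `xnor_dot`, `reference_dot`) and the example values are hypothetical, and the real-valued scaling factors that the XNOR-Net paper applies on top of the binary result are omitted.

```python
def pack_signs(values):
    """Pack a sequence of +1/-1 values into an int, one bit each (bit = 1 encodes +1)."""
    word = 0
    for i, v in enumerate(values):
        if v > 0:
            word |= 1 << i
    return word


def xnor_dot(a_bits, b_bits, n):
    """Dot product of two length-n {-1,+1} vectors given their packed bit patterns."""
    mask = (1 << n) - 1
    agree = ~(a_bits ^ b_bits) & mask   # XNOR: bit is 1 where the two signs match
    matches = bin(agree).count("1")     # population count of matching positions
    # Each match contributes +1 and each mismatch -1: matches - (n - matches)
    return 2 * matches - n


def reference_dot(a, b):
    """Ordinary multiply-accumulate dot product, used only as a cross-check."""
    return sum(x * y for x, y in zip(a, b))


# Hypothetical example: a flattened 3x3 binary input patch and binary filter.
patch = [1, -1, -1, 1, 1, -1, 1, 1, -1]
weights = [1, 1, -1, -1, 1, -1, -1, 1, 1]

result = xnor_dot(pack_signs(patch), pack_signs(weights), len(patch))
print(result, reference_dot(patch, weights))
```

In hardware terms, the multiply-accumulate of a conventional convolution collapses into a row of XNOR gates followed by a bit-counting adder tree, which is the structure the text refers to with "almost no multipliers and relatively small adders".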

Status: In Progress

- 1-2 Master thesis or 2-3 semester project students

- Supervision: Lukas Cavigelli, Renzo Andri

Prerequisites

- Interest in VLSI architecture exploration and computer vision

- VLSI 1 or equivalent

Character

- 15% Theory / Literature Research

- 15% Software Evaluations

- 70% VLSI Architecture, Implementation & Verification

Professor

Detailed Task Description

A detailed task description will be worked out right before the project, taking the student's interests and capabilities into account.

Literature

- Hardware Acceleration of Convolutional Networks:

- Andri, R., Cavigelli, L., Rossi, D., & Benini, L. (2016). YodaNN: An Ultra-Low Power Convolutional Neural Network Accelerator Based on Binary Weights. arXiv preprint arXiv:1606.05487. [1]

- Lukas Cavigelli, David Gschwend, Christoph Mayer, Samuel Willi, Beat Muheim, Luca Benini, "Origami: A Convolutional Network Accelerator", Proc. ACM/IEEE GLS-VLSI'15 [2] [3]

- F. Conti, L. Benini, "A Ultra-Low-Energy Convolution Engine for Fast Brain-Inspired Vision in Multicore Clusters", Proc. ACM/IEEE DATE'15 [4]

- Yu-Hsin Chen, Tushar Krishna, Joel Emer, Vivienne Sze, "Eyeriss: An Energy-Efficient Reconfigurable Accelerator for Deep Convolutional Neural Networks", Proc. ISSCC'16.

- C. Farabet, B. Martini, B. Corda, P. Akselrod, E. Culurciello and Y. LeCun, "NeuFlow: A Runtime Reconfigurable Dataflow Processor for Vision", Proc. IEEE ECV'11@CVPR'11 [5]

- V. Gokhale, J. Jin, A. Dundar, B. Martini and E. Culurciello, "A 240 G-ops/s Mobile Coprocessor for Deep Neural Networks", Proc. IEEE CVPRW'14 [6]

- Chen Zhang, Peng Li, Guangyu Sun, Yijin Guan, Bingjun Xiao, Jason Cong, "Optimizing FPGA-based Accelerator Design for Deep Convolutional Neural Networks", Proc. FPGA'15 [7]

- Rastegari, M., Ordonez, V., Redmon, J. and Farhadi, A., 2016. XNOR-Net: ImageNet Classification Using Binary Convolutional Neural Networks. arXiv preprint arXiv:1603.05279. [8]

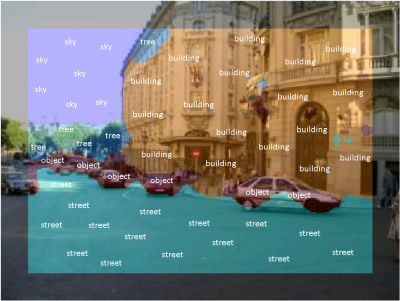

- L. Cavigelli, M. Magno, L. Benini, "Accelerating real-time embedded scene labeling with convolutional networks", Proc. ACM/IEEE/EDAC DAC'15, [9]