Streaming Layer Normalization in ITA (M/1-2S)



Overview

Status: Reserved

- Type: Master Thesis

- Professor: Prof. Dr. L. Benini

- Supervisors:

Introduction

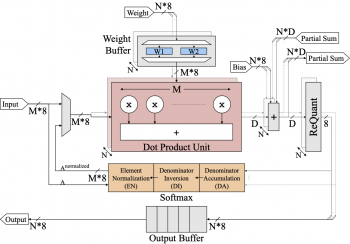

Transformers [1], initially popularized in Natural Language Processing (NLP), have since found applications well beyond it and are now integral to a wide range of deep learning tasks. However, efficient hardware acceleration of transformer models poses new challenges due to their high arithmetic intensity, large memory requirements, and complex dataflow dependencies. To address these challenges, we designed ITA, the Integer Transformer Accelerator [2], which targets efficient transformer inference on embedded systems by exploiting 8-bit quantization and an innovative softmax implementation that operates exclusively on integer values.
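
For readers new to integer quantization, the sketch below shows one common scheme, symmetric per-tensor int8 quantization: a real value x is stored as q = clamp(round(x / s), -128, 127) and recovered approximately as q * s. The scale choice and the function names are illustrative assumptions; ITA's actual quantization parameters are not specified here.

```python
def quantize_int8(values, scale):
    """Map real values to int8 codes: q = clamp(round(x / scale), -128, 127)."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def dequantize_int8(codes, scale):
    """Recover approximate real values: x ~= q * scale."""
    return [q * scale for q in codes]


# Hypothetical per-tensor symmetric scale: the largest magnitude maps to 127.
x = [0.5, -1.0, 0.25, 2.0]
scale = max(abs(v) for v in x) / 127
q = quantize_int8(x, scale)
x_hat = dequantize_int8(q, scale)
```

Each reconstructed value differs from the original by at most half a quantization step (scale / 2), which is the accuracy budget integer-only operators have to work within.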

ITA exploits the parallelism of the attention mechanism and 8-bit integer quantization to improve performance and energy efficiency. To maximize ITA's energy efficiency, we focus on minimizing data movement throughout the execution of the attention mechanism. In contrast to throughput-oriented accelerator designs, which typically employ systolic arrays, ITA implements its processing elements as wide dot-product units, which maximizes the depth of the adder trees and thereby further increases efficiency.
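
To illustrate what a wide dot-product unit computes, the following sketch models one processing element in Python: it multiplies int8 operand pairs and reduces all products with a single binary adder tree whose depth grows as ceil(log2 N) for N inputs. The function names and the 64-element width in the usage note are hypothetical and do not reflect ITA's actual dimensions.

```python
def adder_tree_sum(terms):
    """Reduce integers pairwise, level by level, as a binary adder tree would.

    Returns the total and the number of adder levels (the tree depth).
    """
    level = list(terms)
    depth = 0
    while len(level) > 1:
        # Add neighbouring pairs; an odd element out passes through to the next level.
        nxt = [level[i] + level[i + 1] for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            nxt.append(level[-1])
        level = nxt
        depth += 1
    return (level[0] if level else 0), depth


def dot_product_unit(a, b):
    """Multiply int8 operands element-wise and reduce the products with one adder tree."""
    assert len(a) == len(b), "operand vectors must have the same length"
    assert all(-128 <= v <= 127 for v in a + b), "operands must be int8"
    products = [x * y for x, y in zip(a, b)]
    return adder_tree_sum(products)
```

For example, `dot_product_unit([1] * 64, [2] * 64)` returns `(128, 6)`: 64 products are reduced in six adder levels before a single result is produced.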

To overcome the complex dataflow requirements of the standard softmax, we present a novel approach that performs softmax directly on 8-bit integer quantized values in a streaming fashion. This energy- and area-efficient softmax implementation operates entirely in integer arithmetic, occupies only 3.3% of ITA's total area, and has a mean absolute error of 0.46% compared to a floating-point implementation. Our approach also enables a weight-stationary dataflow by decoupling the denominator summation from the division in softmax. Together, the streaming softmax and the weight-stationary dataflow minimize data movement in the system and, in turn, power consumption.
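
The idea of decoupling the denominator summation from the division can be illustrated with the well-known online-softmax technique. The sketch below is a floating-point, base-e illustration under that assumption; ITA's integer-only formulation differs and is described in [2]. The function names are hypothetical.

```python
import math


def streaming_softmax_stats(stream):
    """One pass over the inputs: track the running maximum and the softmax denominator.

    When a new maximum arrives, the denominator accumulated so far is rescaled
    by exp(old_max - new_max), so earlier elements never have to be revisited.
    """
    running_max = -math.inf
    denom = 0.0
    for x in stream:
        if x > running_max:
            denom = denom * math.exp(running_max - x) + 1.0
            running_max = x
        else:
            denom += math.exp(x - running_max)
    return running_max, denom


def softmax_normalize(values, running_max, denom):
    """Deferred division: applied later, when the inputs are read again."""
    return [math.exp(x - running_max) / denom for x in values]


scores = [1.0, 3.0, 2.0, -1.0]
m, d = streaming_softmax_stats(scores)
probs = softmax_normalize(scores, m, d)
```

Because the statistics are updated element by element, the denominator is complete as soon as the last input has streamed through, and the division can be deferred to a later stage of the pipeline.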

Currently, ITA accelerates only the attention part of a transformer block. In this project, you will add a layer normalization (LayerNorm) module to ITA so that the feed-forward network (FFN) layers can be accelerated as well. Like the softmax, LayerNorm will be implemented in a streaming fashion.
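
A minimal sketch of how a streaming LayerNorm could work, assuming int8 inputs, row statistics gathered with sum and sum-of-squares accumulators, and no learnable gamma/beta or epsilon term. The function name and the fixed-point parameters `out_frac_bits` and `sqrt_frac_bits` are hypothetical; this is one possible starting point, not ITA's design.

```python
import math


def streaming_layernorm_int8(row, out_frac_bits=5, sqrt_frac_bits=8):
    """Integer-only LayerNorm sketch over one row of int8 values.

    Hypothetical illustration: no affine gamma/beta and no epsilon term.
    Uses (x - mean) / std == (N*x - S1) / sqrt(N*S2 - S1**2), where S1 and S2
    are the sum and the sum of squares of the row. Outputs are int8 codes with
    out_frac_bits fractional bits, i.e. z_q ~= z * 2**out_frac_bits.
    `row` must be a list (or other re-iterable) because phase 2 reads it again.
    """
    n = 0
    s1 = 0  # running sum of x
    s2 = 0  # running sum of x * x
    for x in row:  # phase 1: one streaming pass accumulates the statistics
        n += 1
        s1 += x
        s2 += x * x

    var_num = n * s2 - s1 * s1  # equals n**2 * variance; exact in integers, never negative
    # Fixed-point square root: denom ~= sqrt(var_num) * 2**sqrt_frac_bits.
    # A hardware version might use an iterative square-root unit instead of math.isqrt.
    denom = math.isqrt(var_num << (2 * sqrt_frac_bits))
    if denom == 0:
        return [0] * n  # constant row: every element equals the mean

    shift = out_frac_bits + sqrt_frac_bits
    out = []
    for x in row:  # phase 2: inputs are read again (e.g. replayed from a buffer)
        num = (n * x - s1) << shift
        # Round to nearest (ties toward +inf), then saturate to the int8 range.
        q = (2 * num + denom) // (2 * denom)
        out.append(max(-128, min(127, q)))
    return out
```

For example, `streaming_layernorm_int8([10, 20, 30, 40])` returns `[-43, -14, 14, 43]`, matching the floating-point result z * 32 ≈ [-42.9, -14.3, 14.3, 42.9] after rounding. Computing the variance as N*S2 - S1^2 would suffer from cancellation in floating point, but it is exact in integer arithmetic, so the statistics can be collected in a single pass while the data streams through.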

Project

- Familiarize yourself with ITA and propose the modified architecture with a layer normalization unit.

- Implement the RTL of your LayerNorm unit and integrate it into ITA.

- Evaluate your design in terms of performance, energy, and area, and compare it with the original ITA.

- Stretch Goal: Integrate your streaming LayerNorm implementation into the PyTorch framework.

Character

- 20% Architecture review

- 50% RTL implementation

- 30% Evaluation

Prerequisites

- Knowledge of fundamental deep learning concepts.

- Experience with digital design in SystemVerilog as taught in VLSI I.

- Proficiency in Python programming and familiarity with the PyTorch deep learning framework.

References

[2] ITA: An Energy-Efficient Attention and Softmax Accelerator for Quantized Transformers