Extending the HERO RISC-V HPC stack to support multiple devices on heterogeneous SoCs (M/1-3S)

Latest revision as of 12:58, 22 May 2024

Overview

- Status: Available

- Type: Computer Architecture Master / Semester Thesis

- Professor: Prof. Dr. L. Benini

- Supervisors:

Introduction

OpenMP is an Application Programming Interface (API) that supports multi-platform shared-memory multiprocessing programming in C, C++, and Fortran. OpenMP allows developers to write parallel programs that can run on a wide range of hardware, including multi-core processors and symmetric multiprocessing (SMP) systems. OpenMP uses pragma directives to exploit parallelism in the annotated code regions. These directives are embedded in the source code and guide the compiler in generating parallel executable code. In addition to compiler support, an OpenMP runtime library abstracts the details of thread creation and management from the programmer, simplifying the parallelization process.
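As a minimal illustration of the directive-based model described above, the sketch below parallelizes a reduction loop with a single pragma. The function name `array_sum` is hypothetical and chosen only for this example. If the compiler is invoked without OpenMP support, the pragma is ignored and the loop runs serially with the same result.

```c
/* Sum an array using an OpenMP work-sharing loop.
 * The reduction clause gives each thread a private partial sum
 * and combines the partial sums when the loop ends. */
double array_sum(const double *data, long n)
{
    double sum = 0.0;

    #pragma omp parallel for reduction(+:sum)
    for (long i = 0; i < n; i++) {
        sum += data[i];
    }
    return sum;
}
```

The runtime library decides how many threads to create and how to split the iterations among them, so the source contains no explicit thread management.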

Version 4.0 of OpenMP introduced the target directive, which offloads computation to an accelerator by explicitly marking the code regions that should execute there.
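A small sketch of a target region may help here. The function `scale_on_device` and its parameters are hypothetical. The `map` clauses describe which array sections are copied to the accelerator before the region and back to the host after it. When no accelerator is available, a typical OpenMP implementation executes the region on the host instead.

```c
/* Multiply every element of `in` by `factor` and store the result in `out`.
 * map(to: ...) copies the input to the device before the region starts,
 * map(from: ...) copies the output back to the host when it ends. */
void scale_on_device(const float *in, float *out, int n, float factor)
{
    #pragma omp target teams distribute parallel for \
        map(to: in[0:n]) map(from: out[0:n])
    for (int i = 0; i < n; i++) {
        out[i] = factor * in[i];
    }
}
```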

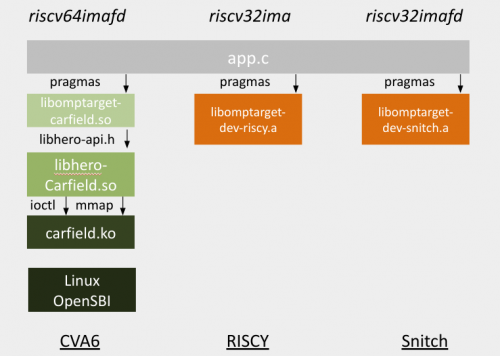

The HERO stack, developed at IIS, provides an implementation of the OpenMP runtime that runs on several of our SoCs with maximal code reuse.

Today, HERO can compile separate applications for different devices, but its OpenMP implementation does not yet support offloading to different devices within the same application.

Project

The goal of the project is to extend the HERO software stack and toolchain to allow offloading from one host running Linux to multiple devices simultaneously.

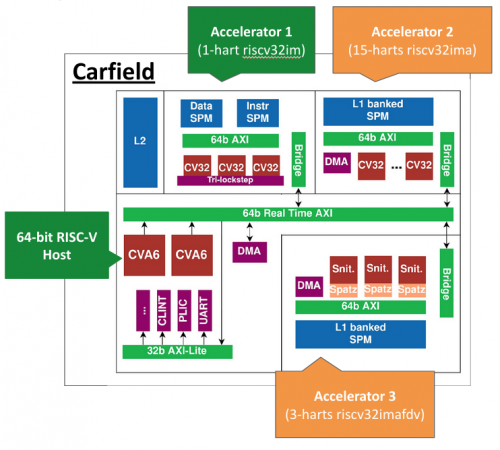

The proposed extension of HERO will be tested on our latest (emulated) heterogeneous platform.

The project aims to enable the following code:

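```c
#pragma omp task
init_data(a);

#pragma omp target map(to:a[:N]) map(from:x[:N]) nowait
compute_1(a, x, N);

#pragma omp target map(to:b[:N]) map(from:z[:N]) nowait
compute_3(b, z, N);

#pragma omp target map(to:y[:N]) map(to:z[:N]) nowait
compute_4(z, x, y, N);

#pragma omp taskwait
```

[OpenMP Offload Programming](https://www.openmp.org/wp-content/uploads/2021-10-20-Webinar-OpenMP-Offload-Programming-Introduction.pdf)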
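The snippet above leaves the kernels, the device selection, and the ordering between tasks unspecified. The following is one possible compilable sketch, not the project's intended implementation. It assumes that `y` is the output of `compute_4`, adds standard `depend` clauses so that each task waits for its inputs, and uses the standard `device` clause to place regions on two devices. The kernel bodies, `run_pipeline`, and `pick_device` are hypothetical placeholders.

```c
#ifdef _OPENMP
#include <omp.h>
#endif

/* Hypothetical helper: map a logical index onto an available device,
 * or onto the host when no accelerator is present. */
int pick_device(int k)
{
#ifdef _OPENMP
    int n = omp_get_num_devices();
    return n > 0 ? k % n : omp_get_initial_device();
#else
    (void)k;
    return 0;
#endif
}

/* Placeholder kernels; the real ones are not given in the project text. */
#pragma omp declare target
static void compute_1(const float *a, float *x, int n)
{
    for (int i = 0; i < n; i++) x[i] = 2.0f * a[i];
}
static void compute_3(const float *b, float *z, int n)
{
    for (int i = 0; i < n; i++) z[i] = b[i] + 1.0f;
}
static void compute_4(const float *z, const float *x, float *y, int n)
{
    for (int i = 0; i < n; i++) y[i] = x[i] + z[i];
}
#pragma omp end declare target

static void init_data(float *a, int n)
{
    for (int i = 0; i < n; i++) a[i] = (float)i;
}

/* Run the pipeline on two devices: compute_1 and compute_3 may overlap,
 * compute_4 starts only after both x and z have been produced. */
void run_pipeline(float *a, const float *b, float *x, float *y, float *z,
                  int n, int dev0, int dev1)
{
    #pragma omp parallel
    #pragma omp single
    {
        #pragma omp task depend(out: a[0:n])
        init_data(a, n);

        #pragma omp target device(dev0) nowait \
            map(to: a[0:n]) map(from: x[0:n]) \
            depend(in: a[0:n]) depend(out: x[0:n])
        compute_1(a, x, n);

        #pragma omp target device(dev1) nowait \
            map(to: b[0:n]) map(from: z[0:n]) \
            depend(out: z[0:n])
        compute_3(b, z, n);

        #pragma omp target device(dev0) nowait \
            map(to: x[0:n], z[0:n]) map(from: y[0:n]) \
            depend(in: x[0:n], z[0:n]) depend(out: y[0:n])
        compute_4(z, x, y, n);

        #pragma omp taskwait
    }
}
```

A caller could invoke `run_pipeline(a, b, x, y, z, N, pick_device(0), pick_device(1))`. On a system with a single device or none, both indices resolve to the same target, and the dependences alone determine the execution order.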

The implementation will rely on several existing HERO libraries and drivers.

Character

- 20% Study the LLVM project, HeroSDK, and existing asynchronous offloading implementations (for example, CUDA)

- 20% Get familiar with the SoC architecture and its FPGA implementation

- 60% Propose and implement the runtime library extensions (written in C) for asynchronous offloading

Prerequisites

- Good knowledge of computer architectures

- Proficient in C, knowledge of C++

- Willing to learn about Linux and Linux drivers