Flexfloat DL Training Framework

From iis-projects

Short Description

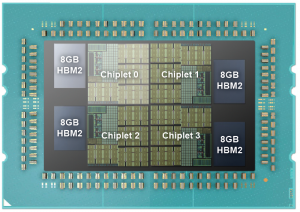

So far, we have implemented inference for various smaller networks on our PULP-based systems ([pulp-nn]). Training DNNs is data-intensive and has been too memory-hungry to run on these systems. Our latest architecture concept, Manticore, is chiplet-based, comprises 4096 Snitch cores, and includes HBM2 memory (see image).

Recently, industry and academia have started exploring the computational precision required for training. Many state-of-the-art training hardware platforms now support not only 64-bit and 32-bit floating-point formats, but also 16-bit floating-point formats (IEEE binary16 and bfloat16, also known as brainfloat). Recent work proposes even narrower training formats, such as 8-bit floats.
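To make the difference between the two 16-bit formats concrete, the following minimal Python sketch emulates rounding a value to binary16 and to bfloat16 on a host machine. It is only an illustration and not part of the project's framework: the helper names `to_binary16` and `to_bfloat16` are hypothetical, the bfloat16 path assumes round-to-nearest-even applied to the upper 16 bits of the float32 encoding, and NaN inputs are simply passed through.

```python
import struct


def to_binary16(x: float) -> float:
    """Round x to the nearest IEEE binary16 value (5 exponent, 10 mantissa bits).

    Uses the standard-library 'e' (half-precision) struct format.
    Raises OverflowError if x is too large for binary16.
    """
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_bfloat16(x: float) -> float:
    """Round x to the nearest bfloat16 value (8 exponent, 7 mantissa bits).

    Assumption for this sketch: bfloat16 is treated as the upper 16 bits
    of the float32 encoding, rounded to nearest, ties to even.
    """
    if x != x:  # NaN: pass through unchanged
        return x
    # Reinterpret the float32 encoding of x as an unsigned 32-bit integer.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Add a bias so that clearing the low 16 bits rounds to nearest even.
    bias = 0x7FFF + ((bits >> 16) & 1)
    bits = (bits + bias) & 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


if __name__ == "__main__":
    for value in (0.1, 1.0, 1000.5):
        print(value, to_binary16(value), to_bfloat16(value))
```

With these helpers, binary16 keeps more mantissa bits and therefore resolves values near 1.0 more finely, while bfloat16 keeps the float32 exponent range: a value such as `1e10` survives `to_bfloat16` but makes `to_binary16` raise `OverflowError`.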

Status: Available

- Looking for 1 Semester/Master student

- Contact: Gianna Paulin, Tim Fischer

Prerequisites

- Machine Learning

- Python

- C

Character

- 25% Theory

- 75% Implementation