A Snitch-based Compute Accelerator for HERO (M/1-2S)


Overview

Status: Completed

- Type: Semester Thesis

- Semester: Autumn Semester 2020

- Student: Noah Hütter

- Start: September 14, 2020

- Professor: Prof. Dr. L. Benini

- Supervisors:

Character

- 10% Literature / existing work exploration

- 20% RTL

- 20% Verification

- 30% Implementation

- 20% Software / Tooling

Prerequisites

- VLSI I or equivalent: Understanding of at least one RTL language and FPGA design principles.

- Basic prior knowledge of embedded / bare-metal C and Assembly

- Preferred: prior experience writing RTL or low-level C

- Preferred: prior experience with Xilinx Zynq platform

Introduction

Heterogeneous Systems on Chip (HESoCs) often couple a versatile high-performance host processor, capable of running fully-fledged operating systems and standard software, to programmable manycore accelerators (PMCAs), which are composed of clusters of simpler cores that tackle large, parallel compute workloads. This setup enables both high performance and high energy efficiency for a wide variety of tasks.

At IIS, our group developed the open-source Parallel Ultra-Low Power (PULP) platform [1], which provides a multicore cluster based on the open RISC-V instruction set architecture (ISA). PULP targets maximum energy efficiency for embedded applications with heavier workloads, such as computer vision tasks for unmanned aerial vehicles (UAVs) and machine learning inference.

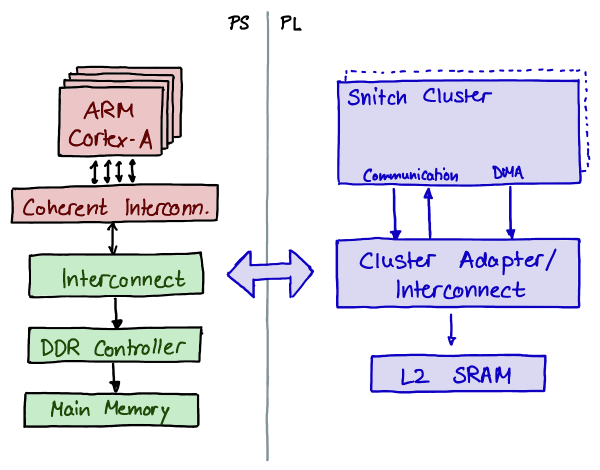

We also developed an open FPGA-based HESoC research platform called HERO [2]: it combines a multicore ARM host with a PULP cluster as an accelerator, although both components are designed to be interchangeable. HERO features a shared virtual memory system between host and accelerator and provides a heterogeneous compiler toolchain with OpenMP support for acceleration; this keeps the programming model simple and portable and enables accelerating state-of-the-art software and benchmarks.

Xilinx's Zynq MPSoCs [3] are an attractive implementation target for HERO: they provide a fully-fledged hardwired ARM SoC, acting as the host, coupled to an FPGA fabric that implements the accelerator.

![The Snitch cluster in its default configuration [4]](/images/thumb/4/4a/Snitch-bd.png/600px-Snitch-bd.png)

Alongside PULP and its cluster, we recently introduced the Snitch ecosystem, which targets energy-efficient high-performance systems. Snitch is a minimal RISC-V core, only 15 kGE in size. It can optionally be coupled to an FPU or a DMA engine, with further DSP extensions in development. Multiple cores, peripherals, and a tightly-coupled memory form a Snitch cluster, as shown in Figure 2.

In contrast to PULP and its RI5CY core, Snitch is a single-stage core, and its cluster is more modular and configurable; it is tuned for high performance by default, offering eight 64-bit FPUs instead of two 32-bit ones, a wider DMA, and extensions aimed at maximizing FPU utilization.

To accelerate hardware exploration, simplify software evaluation, and arrive at a mature heterogeneous software ecosystem for Snitch, we want to add the Snitch cluster to the HERO family and integrate it as an accelerator.

Project Description

![Zynq UltraScale+ family MPSoC top-level block diagram [3]](/images/thumb/f/f8/Ug1085-zynq-ultrascale-top.png/600px-Ug1085-zynq-ultrascale-top.png)

The core goal of the project is to implement a HERO system on a Zynq UltraScale+ family MPSoC, shown in Figure 3, and to integrate a Snitch cluster as its accelerator, optimizing the cluster configuration and its connection to the ARM host with respect to the available fabric resources.
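As a rough illustration of what "fitting the available fabric resources" can mean, the C sketch below compares the estimated resource usage of a candidate cluster configuration against the resources available on the PL and accepts it only if no resource type exceeds a chosen utilization ceiling. The `fpga_resources` type, both function names, and the ceiling-based criterion are hypothetical; real estimates would come from synthesis and implementation reports, and any numbers passed in are placeholders.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical resource vector; real values would come from
 * synthesis/implementation utilization reports, not from this sketch. */
typedef struct {
    double luts;
    double ffs;
    double brams;
    double dsps;
} fpga_resources;

/* Largest fraction of any single resource type used by the design.
 * Assumes every available count is greater than zero. */
double worst_utilization(const fpga_resources *est,
                         const fpga_resources *avail)
{
    const double used[4]  = { est->luts, est->ffs, est->brams, est->dsps };
    const double total[4] = { avail->luts, avail->ffs,
                              avail->brams, avail->dsps };
    double worst = 0.0;
    for (size_t i = 0; i < 4; i++) {
        assert(total[i] > 0.0);
        double u = used[i] / total[i];
        if (u > worst)
            worst = u;
    }
    return worst;
}

/* Assumed criterion: a configuration fits "with margin" if no resource
 * type exceeds max_util, leaving headroom for routing and timing. */
bool config_fits(const fpga_resources *est,
                 const fpga_resources *avail, double max_util)
{
    return worst_utilization(est, avail) <= max_util;
}
```

A ceiling of 0.7, for example, keeps roughly 30% of every resource type free; the appropriate margin is a judgment call that the design-space exploration would have to settle.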

Milestones

The following are the milestones that we expect to achieve throughout the project:

- Familiarize yourself with both the Snitch cluster and the HERO ecosystem, as well as their respective tools and workflows. Run some code in simulation on the Snitch cluster and set up a Linux environment on the Zynq host.

- Explore the design space to find a suitable cluster configuration that fits on the Zynq’s Programmable Logic (PL) with some margin to spare. Integrate such a cluster on the Zynq and connect it to the Processing System (PS), using the preexisting PULP-based HERO SoC as a guideline.

- Write a simple application that successfully demonstrates the necessary functionalities of a copy-based host-accelerator paradigm by:

  - offloading code and data to the cluster accelerator

  - launching and monitoring execution

  - transferring back results
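The copy-based paradigm in the last milestone can be sketched in C as four host-side steps: copy inputs into accelerator memory, set a start flag, poll a status word, and copy the results back. This is a single-threaded simulation under stated assumptions: the `accel_mem` layout, the `start`/`status` words, `kernel_id` as a stand-in for an offloaded binary, and the `accel_tick` function that emulates the cluster are all hypothetical and not part of HERO or Snitch. On the real system the accelerator memory would be mapped into the host's address space and the cluster would run concurrently, so the poll loop would only read the status word.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define ACCEL_MEM_WORDS 256  /* hypothetical size of the shared buffer */

enum accel_status { ACCEL_IDLE = 0, ACCEL_RUNNING = 1, ACCEL_DONE = 2 };

/* Hypothetical view of accelerator-local memory as seen by the host. */
typedef struct {
    volatile uint32_t start;   /* host writes 1 to launch           */
    volatile uint32_t status;  /* accelerator reports its progress  */
    uint32_t kernel_id;        /* stand-in for an offloaded binary  */
    uint32_t n;                /* number of valid data words        */
    uint32_t data[ACCEL_MEM_WORDS];
} accel_mem;

/* Simulation only: one call advances the "cluster" by one step.
 * Kernel 0 (hypothetical) doubles every element in place. */
static void accel_tick(accel_mem *m)
{
    if (m->start && m->status == ACCEL_IDLE) {
        m->status = ACCEL_RUNNING;
    } else if (m->status == ACCEL_RUNNING) {
        if (m->kernel_id == 0)
            for (uint32_t i = 0; i < m->n; i++)
                m->data[i] *= 2;
        m->start = 0;
        m->status = ACCEL_DONE;
    }
}

/* Host side: offload, launch, monitor, copy back.
 * Returns 0 on success, -1 if the data does not fit, -2 on timeout. */
int offload_and_run(accel_mem *m, uint32_t kernel_id, const uint32_t *in,
                    uint32_t *out, uint32_t n, unsigned max_polls)
{
    assert(m != NULL && in != NULL && out != NULL);
    if (n > ACCEL_MEM_WORDS)
        return -1;

    /* 1. Offload the kernel selector and the input data. */
    m->kernel_id = kernel_id;
    m->n = n;
    memcpy(m->data, in, n * sizeof *in);
    m->status = ACCEL_IDLE;

    /* 2. Launch execution. */
    m->start = 1;

    /* 3. Monitor: poll the status word, giving up after max_polls. */
    for (unsigned p = 0; p < max_polls; p++) {
        accel_tick(m);  /* stands in for the concurrently running cluster */
        if (m->status == ACCEL_DONE) {
            /* 4. Transfer the results back. */
            memcpy(out, m->data, n * sizeof *out);
            return 0;
        }
    }
    return -2;
}
```

Calling `offload_and_run(&m, 0, in, out, 3, 10)` with `in = {1, 2, 3}` leaves `{2, 4, 6}` in `out`; the poll limit turns a hung accelerator into an error code instead of an endless loop.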

Stretch Goals

Should the above milestones be reached earlier than expected and you are motivated to do further work, we propose the following stretch goals to aim for:

- Reusing as much of the preexisting HERO tooling as possible, evaluate and optimize more ambitious, highly parallel compute workloads such as machine learning layers or linear algebra kernels.

- Adapt the existing heterogeneous cross-compiler toolchain with OpenMP support to your new system; use it to further evaluate your system using state-of-the-art benchmark suites or general-purpose software.

- The Snitch cluster has a much higher peak memory throughput than the existing PULP cluster; reevaluate and optimize the data movement between host and cluster to maximize performance, making optimal use of existing hard-wired MPSoC data-mover infrastructure.

- Extend the accelerator to two or more clusters, reexploring the design space.

- Extend and improve the SoC in other ways, for example by tuning the cluster design or adding peripherals.
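A first-order model helps when reasoning about the data-movement stretch goal: if the input is split into chunks and a DMA engine fills one buffer while the cores compute on the other (double buffering), transfer and compute overlap, and the slower of the two stages sets the pace. The sketch below assumes a single DMA engine, fixed per-chunk transfer and compute times, and ignores writing results back; the function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Serial schedule: each chunk is transferred, then computed, no overlap. */
uint64_t serial_cycles(uint64_t chunks, uint64_t t_xfer, uint64_t t_comp)
{
    return chunks * (t_xfer + t_comp);
}

/* Double buffering: the transfer of chunk i+1 overlaps the compute of
 * chunk i, so after the first transfer the slower stage dominates:
 * t_xfer + (chunks - 1) * max(t_xfer, t_comp) + t_comp. */
uint64_t double_buffered_cycles(uint64_t chunks, uint64_t t_xfer,
                                uint64_t t_comp)
{
    assert(chunks > 0);
    uint64_t stage = t_xfer > t_comp ? t_xfer : t_comp;
    return t_xfer + (chunks - 1) * stage + t_comp;
}
```

With 4 chunks, 3 cycles of transfer and 5 cycles of compute per chunk, the serial schedule takes 32 cycles and the double-buffered one 23. In this model, once compute dominates, faster transfers only shorten the initial transfer of the first chunk.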

Project Realization

Time Schedule

The time schedule presented in Table 1 is merely a proposal; it is primarily intended as a reference and an estimate of the time required for each step.

| Project phase | Time estimate |
|---|---|
| Get familiar with Snitch and HERO | 2 weeks |
| Explore and integrate Cluster | 4 weeks |
| Develop demonstrator | 3 weeks |
| Stretch goals | remaining time |
| Write report | 2 weeks |
| Prepare presentation | 1 week |

Meetings

Weekly meetings will be held between the student and the assistants. The exact time and location of these meetings will be determined within the first week of the project to fit the student’s and the assistants’ schedules. These meetings will be used to evaluate the status and progress of the project. Besides these regular meetings, additional meetings can be organized to address urgent issues.

Weekly Reports

The student is advised, but not required, to write a weekly report at the end of each week and to send it to the advisors. The weekly report should briefly summarize the work, progress, and findings of the week, plan the actions for the next week, and raise open questions and points. It is also an important means for the student to develop a goal-oriented attitude to work.

HDL Guidelines

Naming Conventions

Adopting a consistent naming scheme is one of the most important steps in making your code easy to understand. If signals, processes, and entities are always named the same way, inconsistencies are easier to detect. Moreover, if a design group shares the same naming convention, all members immediately feel at home with each other's code. This project follows the naming conventions at https://github.com/lowRISC/style-guides/.

Report

Documentation is an important and often overlooked aspect of engineering. A final report has to be completed within this project.

The de facto common language of engineering is English. Therefore, the final report should preferably be written in English.

Any word processing software may be used for writing the reports; however, the IIS staff strongly encourages the use of LaTeX together with Inkscape or another vector drawing program for block diagrams.

If you write the report in LaTeX, we offer an instructive, ready-to-use template, which can be forked from the Git repository at https://iis-git.ee.ethz.ch/akurth/iisreport.

Final Report

The final report has to be presented at the end of the project, and a digital copy must be handed in; it remains the property of the IIS. Note that this task description is part of your report and has to be attached to your final report.

Presentation

There will be a presentation (15 min presentation and 5 min Q&A) at the end of this project in order to present your results to a wider audience. The exact date will be determined towards the end of the work.

Deliverables

In order to complete the project successfully, the following deliverables have to be submitted at the end of the work:

- Final report incl. presentation slides

- Source code and documentation for all developed software and hardware

- Testsuites (software) and testbenches (hardware)

- Synthesis and implementation scripts, results, and reports

References

[1] F. Conti, D. Rossi, A. Pullini, I. Loi, and L. Benini, “PULP: A ultra-low power parallel accelerator for energy-efficient and flexible embedded vision,” J. Signal Process. Syst., vol. 84, no. 3, pp. 339–354, Sep. 2016.

[2] A. Kurth, A. Capotondi, P. Vogel, L. Benini, and A. Marongiu, “HERO: An open-source research platform for hw/sw exploration of heterogeneous manycore systems,” in ANDARE ’18, 2018.

[3] Xilinx, “Zynq UltraScale+ Device: Technical Reference Manual.” https://www.xilinx.com/support/documentation/user_guides/ug1085-zynq-ultrascale-trm.pdf, 2019.

[4] F. Zaruba, F. Schuiki, T. Hoefler, and L. Benini, “Snitch: A 10 kGE Pseudo Dual-Issue Processor for Area and Energy Efficient Execution of Floating-Point Intensive Workloads.” 2020.
