Efficient collective communications in FlooNoC (1M)

Overview

Status: In progress

- Type: Master Thesis

- Professor: Prof. Dr. L. Benini

- Supervisors:

- Student: Mingrui Yuan minyuan@student.ethz.ch

Introduction

To realize the performance potential of many-core architectures, efficient and scalable on-chip communication is required [1]. Collective communication lies on the critical path for many applications; the criticality of such communication is evident in the dedicated collective and barrier networks employed in several supercomputers, such as Summit [2], NYU Ultracomputer, Cray T3D and Blue Gene/L. Likewise, many-core architectures would benefit from hardware support for collective communications but may not be able to afford separate, dedicated networks due to routing and area costs. For this reason, several papers in the literature have explored integrating collective communication support directly into the existing NoC [1, 3].

Collective communication operations are said to be "rooted" (or "asymmetric") when a specific node (the root) is either the sole origin (or producer) of data to be redistributed or the sole destination (or consumer) of data or results contributed by the nodes involved in the communication. Conversely, in "non-rooted" (or "symmetric") operations all nodes contribute and receive data [6].

Our focus is on rooted operations where the root node exchanges a single datum, i.e. multicast (when the root is a producer) and reduction (when the root is a consumer) operations.

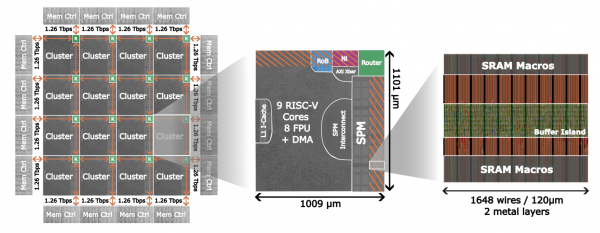
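
As a purely functional illustration of the two rooted operations, the sketch below models multicast as one datum copied to every destination and reduction as one datum per source combined into a single result at the root. The function names, the node numbering and the default `+` operator are assumptions made for this example; they say nothing about how the hardware implements either operation.

```python
from functools import reduce as fold
import operator


def multicast(root_value, destinations):
    """Root is the sole producer: every destination receives the same datum."""
    return {node: root_value for node in destinations}


def reduction(contributions, op=operator.add):
    """Root is the sole consumer: one datum per source is combined into one result."""
    return fold(op, contributions.values())


received = multicast(42, destinations=[1, 2, 3])
total = reduction({1: 10, 2: 20, 3: 12})
print(received)  # {1: 42, 2: 42, 3: 42}
print(total)     # 42
```

Any associative operator can be passed as `op`, for instance `max` instead of addition.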

In two previous works, we explored the cost of integrating multicast and reduction support, respectively, directly into the interconnect of a shared-memory many-core system called Occamy [4]. In Occamy, 216+1 cores and their tightly-coupled data memories are interconnected by a hierarchy of AXI XBARs [5] in a partitioned global address space (PGAS). Each AXI XBAR interconnects a set of AXI masters with a set of AXI slaves, enabling unicast communication between any master and any slave. We extended the AXI XBAR to multicast transactions from one AXI master to multiple AXI slaves, and to reduce data sent from multiple masters to a single slave.

However, an interconnect built from a hierarchy of AXI XBARs scales poorly. AXI was not intended for large systems with a deep hierarchy, where masters and slaves communicate with each other over many "hops". Occamy is a good example of the limits of what can be achieved with an AXI interconnect (30% of the area was dedicated to the AXI interconnect). An actual network-on-chip solves these issues, which is why, after the lessons learnt in Occamy, we started working on FlooNoC [7]. In this thesis, we want to extend FlooNoC with the same multicast and reduction operations that the previous works added to the AXI XBAR.
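
To give a feel for why in-network multicast matters once communication spans many hops, the sketch below counts link traversals for a one-to-all transfer. It assumes a 2D mesh with dimension-ordered (X then Y) routing and a root in one corner; the topology, routing scheme, mesh size and function names are all assumptions chosen for illustration, not properties taken from this project description.

```python
def xy_path(src, dst):
    """Links traversed by dimension-ordered (X then Y) routing from src to dst."""
    (x, y), (dx, dy) = src, dst
    links = []
    while x != dx:
        nx = x + (1 if dx > x else -1)
        links.append(((x, y), (nx, y)))
        x = nx
    while y != dy:
        ny = y + (1 if dy > y else -1)
        links.append(((x, y), (x, ny)))
        y = ny
    return links


def link_traversals(root, dests):
    """Return (unicast link traversals, distinct links used by a multicast tree)."""
    paths = [xy_path(root, d) for d in dests]
    unicast_links = sum(len(p) for p in paths)           # one packet per destination
    tree_links = len({link for p in paths for link in p})  # shared links carry one copy
    return unicast_links, tree_links


# Hypothetical 4x4 mesh with the root in a corner.
nodes = [(x, y) for x in range(4) for y in range(4)]
root = (0, 0)
print(link_traversals(root, [n for n in nodes if n != root]))  # (48, 15)
```

In this model, repeated unicasts cross 48 links in total, while a multicast that forks inside the network crosses each of the 15 tree links once.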

Project description

In this thesis you will implement hardware support for multicast and reduction operations in FlooNoC, an open-source, versatile, AXI-based network-on-chip architecture developed in our group. You will evaluate the impact of your extension on power, performance and area (PPA) and benchmark it on common computational workloads (e.g. GEMM).

Detailed task description

To break it down in more detail, you will:

- Review previous work:

  - Literature review on collective communication support in NoCs

  - Familiarize yourself with the multicast and reduction extensions for the AXI XBAR

- Implement support for multicast and reduction operations in FlooNoC:

  - Extend the testbench and verification infrastructure to verify the design

  - Plan and carry out RTL modifications

  - Explore PPA overheads and correlate them with your RTL changes

  - Iterate and improve PPA of the design

- System integration and evaluation:

  - Extend the FlooNoC generator to support your extensions

  - Extend our FlooNoC-based Occamy system-on-chip to support your extensions

  - Extend our implementations of several common parallel programming primitives (e.g. barrier) and computational workloads (e.g. GEMM) to use your extensions

  - Evaluate the performance gains from your extensions in the FlooNoC-based Occamy system-on-chip

  - Compare the scaling behaviour of the kernels (with and without your extensions) with the size of the system
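
As one way to picture how a primitive such as a barrier could use the extensions, the sketch below counts the messages the root handles in a single barrier episode. It assumes a centralized barrier in which arrivals are gathered at a root and followed by a release; the function name and the message-count model are hypothetical simplifications, not a description of the existing barrier implementation or a prediction of measured results.

```python
def barrier_root_traffic(num_cores, hw_collectives):
    """Return (messages arriving at root, messages injected by root) for one barrier."""
    others = num_cores - 1
    if hw_collectives:
        # Assumed model: arrivals are combined in the network (reduction),
        # and the release is a single multicast from the root.
        return (min(1, others), min(1, others))
    # Assumed baseline: every other core notifies the root with a unicast,
    # and the root then releases each core with a separate unicast.
    return (others, others)


for n in (8, 64, 216):
    print(n, barrier_root_traffic(n, False), barrier_root_traffic(n, True))
```

Under these assumptions the root's traffic grows linearly with the core count in the baseline and stays constant with the extensions, which is the kind of scaling trend the evaluation would need to confirm or refute with real measurements.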

Stretch goals

Additional optional stretch goals may include:

- Evaluate your extensions on synthetic traffic patterns

- Extend our OpenMP runtime to make use of your extensions

- Measure how your extension improves the overall runtime of real-world OpenMP workloads

Character

- 10% Literature/architecture review

- 50% RTL design and verification

- 30% Physical design exploration

- 10% Bare-metal software development

Prerequisites

- Strong interest in computer architecture

- Experience with digital design in SystemVerilog as taught in VLSI I

- Experience with ASIC implementation flow as taught in VLSI II

- Preferred: Experience in bare-metal or embedded C programming

References

[1] Supporting Efficient Collective Communication in NoCs

[2] The high-speed networks of the Summit and Sierra supercomputers

[3] Towards the ideal on-chip fabric for 1-to-many and many-to-1 communication

[4] Occamy many-core chiplet system

[5] PULP platform's AXI XBAR IP documentation

[6] Encyclopedia of Parallel Computing: Collective Communication entry

[7] FlooNoC: A Fast, Low-Overhead On-chip Network