Hardware Exploration of Shared-Exponent MiniFloats (M)

From iis-projects

Lbertaccini (talk | contribs) |


[[Category:Digital]]

Revision as of 15:45, 15 February 2024


Overview

Status: Available

- Type: Master Thesis

- Professor: Prof. Dr. L. Benini

- Supervisors:

Introduction

Low-precision floating-point (FP) formats are gaining traction in neural network (NN) training. Employing low-precision formats, such as 8-bit FP data types, reduces the model's memory footprint and opens new opportunities to increase the system's energy efficiency. While many commercial platforms already support 8-bit FP data types, introducing sub-8-bit formats is key to meeting the memory footprint and efficiency requirements of ever-larger NN models.

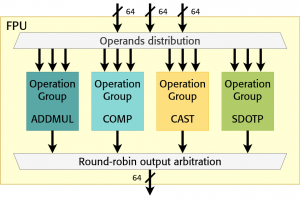

The FP units (FPUs) developed at IIS [1], [2] already provide hardware support for low-precision FP formats (down to 8 bits). The goal of this project is to explore sub-8-bit FP formats, with a particular emphasis on shared-exponent MiniFloats [3]. Such formats share a single exponent across a block of N sub-8-bit values and are currently being researched by many hardware providers [3].
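To make the shared-exponent idea concrete, the following is a minimal Python sketch, not the format defined in [3] or implemented in the IIS FPUs. It assumes a simplified encoding in which each of the N values in a block is stored as a small signed integer and the block carries one power-of-two scale. The function names, the `elem_bits` parameter, and the rounding and clamping choices are illustrative assumptions; real shared-exponent MiniFloat formats may give each element its own small exponent and mantissa.

```python
import math

def quantize_block(values, elem_bits=4):
    """Encode a block as (shared_exp, elems): one exponent for all values.

    Assumption: elements are signed integers of elem_bits bits (symmetric range),
    a simplification of an actual shared-exponent MiniFloat element encoding.
    """
    qmax = 2 ** (elem_bits - 1) - 1           # largest element magnitude, 7 for 4 bits
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return 0, [0] * len(values)
    # Pick the power-of-two scale so the largest magnitude maps to about qmax.
    shared_exp = math.ceil(math.log2(max_abs / qmax))
    scale = 2.0 ** shared_exp
    # Round each value to the nearest element code; clamp to guard against overflow.
    elems = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return shared_exp, elems

def dequantize_block(shared_exp, elems):
    """Reconstruct approximate real values from the shared exponent and elements."""
    scale = 2.0 ** shared_exp
    return [e * scale for e in elems]

block = [0.5, -1.5, 3.5, 0.3]
shared_exp, elems = quantize_block(block, elem_bits=4)
restored = dequantize_block(shared_exp, elems)
print(shared_exp, elems, restored)
```

In this sketch the block of four values costs 4 x 4 bits plus one shared exponent, instead of four full exponents. The price is that small values in a block dominated by a large one lose precision: here 0.3 is reconstructed as 0.5.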

Character

- 20% Literature / architecture review

- 40% RTL implementation

- 40% Evaluation

Prerequisites

- Strong interest in computer architecture

- Experience with digital design in SystemVerilog as taught in VLSI I

- Experience with ASIC implementation flow (synthesis) as taught in VLSI II

References

[1] MiniFloat-NN and ExSdotp: An ISA Extension and a Modular Open Hardware Unit for Low-Precision Training on RISC-V cores, https://arxiv.org/abs/2207.03192