Difference between revisions of "Rethinking our Convolutional Network Accelerator Architecture"

From iis-projects

| Line 1: | Line 1: | ||

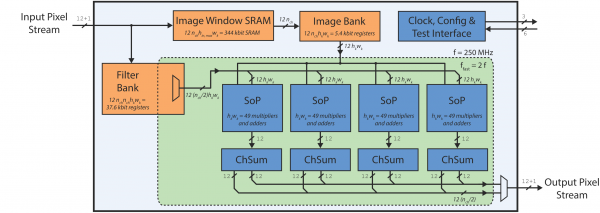

| + | [[File:Origami-top.png|600px|thumb]] | ||

[[File:x1-adas.jpg|500px|thumb]] | [[File:x1-adas.jpg|500px|thumb]] | ||

[[File:Labeled-scene.png|400px|thumb]] | [[File:Labeled-scene.png|400px|thumb]] | ||

==Short Description== | ==Short Description== | ||

| − | Imaging sensor networks, UAVs, smartphones, and other embedded computer vision systems require power-efficient, low-cost and high-speed implementations of synthetic vision systems capable of recognizing and classifying objects in a scene. Many popular algorithms in this area require the evaluations of multiple layers of filter banks. Almost all state-of-the-art synthetic vision systems are based on features extracted using multi-layer convolutional networks (ConvNets). When evaluating ConvNets, most of the time is spent performing the convolutions (80% to 90%). We have built | + | Imaging sensor networks, UAVs, smartphones, and other embedded computer vision systems require power-efficient, low-cost and high-speed implementations of synthetic vision systems capable of recognizing and classifying objects in a scene. Many popular algorithms in this area require the evaluations of multiple layers of filter banks. Almost all state-of-the-art synthetic vision systems are based on features extracted using multi-layer convolutional networks (ConvNets). When evaluating ConvNets, most of the time is spent performing the convolutions (80% to 90%). We have built an accelerator for this, Origami, which has been very successful. Nevertheless, it has some limitations such as the synthesis-time fixed filter sizes and room for improvements in terms of energy efficiency. We are looking for your creativity to completely (or also incrementally) rethink the architecture to make it more versatile and energy efficient. |

| − | ===Status: Available=== | + | ===Status: Not Available=== |

: 1-2 Master thesis or 1-3 semester project students | : 1-2 Master thesis or 1-3 semester project students | ||

: Supervision: [[:User:Lukasc | Lukas Cavigelli]] | : Supervision: [[:User:Lukasc | Lukas Cavigelli]] | ||

| − | [[Category:Digital]] [[Category:FPGA]] [[Category:ASIC]] [[Category: | + | [[Category:Digital]] [[Category:FPGA]] [[Category:ASIC]] [[Category:Not available]] [[Category:Semester Thesis]] [[Category:Master Thesis]] |

===Prerequisites=== | ===Prerequisites=== | ||

| Line 23: | Line 24: | ||

==Detailed Task Description== | ==Detailed Task Description== | ||

| − | + | A detailed task description will be worked out right before the project, taking the student's interests and capabilities into account. | |

| + | <!-- | ||

===Goals=== | ===Goals=== | ||

The goals of this project are | The goals of this project are | ||

| Line 29: | Line 31: | ||

* to explore various architectures to perform the 2D convolutions used in convolutional networks energy-efficiently in the frequency domain, considering the constraints of an ASIC design, and to perform fixed-point analyses for the most viable architecture(s) | * to explore various architectures to perform the 2D convolutions used in convolutional networks energy-efficiently in the frequency domain, considering the constraints of an ASIC design, and to perform fixed-point analyses for the most viable architecture(s) | ||

| − | ===Important Steps=== | + | ===Important Steps for Silicon=== |

| + | If you are doing a larger project and want to take your design to manufacturing, there are several steps you/we have to go through: | ||

# Do some first project planning. Create a time schedule and set some milestones based on what you have learned as part of the VLSI lectures. | # Do some first project planning. Create a time schedule and set some milestones based on what you have learned as part of the VLSI lectures. | ||

# Get to understand the basic concepts of convolutional networks. Create a Matlab model of the problem at hand. | # Get to understand the basic concepts of convolutional networks. Create a Matlab model of the problem at hand. | ||

| Line 69: | Line 72: | ||

* Synthesis/Backend (2 weeks) | * Synthesis/Backend (2 weeks) | ||

* Report and presentation (2-3 weeks) | * Report and presentation (2-3 weeks) | ||

| − | + | --> | |

===Literature=== | ===Literature=== | ||

| − | + | ||

* Hardware Acceleration of Convolutional Networks: | * Hardware Acceleration of Convolutional Networks: | ||

| + | ** Lukas Cavigelli, David Gschwend, Christoph Mayer, Samuel Willi, Beat Muheim, Luca Benini, "Origami: A Convolutional Network Accelerator", Proc. ACM/IEEE GLS-VLSI'15 [http://dl.acm.org/citation.cfm?id=2743766] [http://arxiv.org/abs/1512.04295] | ||

| + | ** F. Conti, L. Benini, "A Ultra-Low-Energy Convolution Engine for Fast Brain-Inspired Vision in Multicore Clusters", Proc. ACM/IEEE DATE'15 [http://dl.acm.org/citation.cfm?id=2755910] | ||

| + | ** Yu-Hsin Chen, Tushar Krishna, Joel Emer, Vivienne Sze, "Eyeriss: An Energy-Efficient Reconfigurable Accelerator for Deep Convolutional Neural Networks", Proc. ISSCC'16. | ||

** C. Farabet, B. Martini, B. Corda, P. Akselrod, E. Culurciello and Y. LeCun, "NeuFlow: A Runtime Reconfigurable Dataflow Processor for Vision", Proc. IEEE ECV'11@CVPR'11 [http://ieeexplore.ieee.org/xpls/icp.jsp?arnumber=5981829] | ** C. Farabet, B. Martini, B. Corda, P. Akselrod, E. Culurciello and Y. LeCun, "NeuFlow: A Runtime Reconfigurable Dataflow Processor for Vision", Proc. IEEE ECV'11@CVPR'11 [http://ieeexplore.ieee.org/xpls/icp.jsp?arnumber=5981829] | ||

** V. Gokhale, J. Jin, A. Dundar, B. Martini and E. Culurciello, "A 240 G-ops/s Mobile Coprocessor for Deep Neural Networks", Proc. IEEE CVPRW'14 [http://ieeexplore.ieee.org/xpl/articleDetails.jsp?tp=&arnumber=6910056] | ** V. Gokhale, J. Jin, A. Dundar, B. Martini and E. Culurciello, "A 240 G-ops/s Mobile Coprocessor for Deep Neural Networks", Proc. IEEE CVPRW'14 [http://ieeexplore.ieee.org/xpl/articleDetails.jsp?tp=&arnumber=6910056] | ||

| − | ** [http://cadlab.cs.ucla.edu/~cong/slides/fpga2015_chen.pdf] | + | ** Chen Zhang, Peng Li, Guangyu Sun, Yijin Guan, Bingjun Xiao, Jason Cong, "Optimizing FPGA-based Accelerator Design for Deep Convolutional Neural Networks", Proc. FPGA'15 [http://cadlab.cs.ucla.edu/~cong/slides/fpga2015_chen.pdf] |

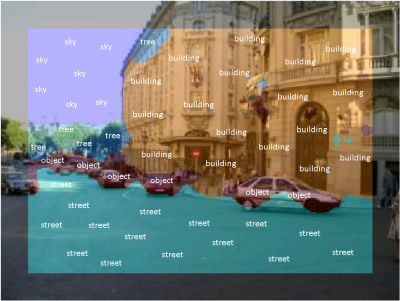

| − | * | + | * L. Cavigelli, M. Magno, L. Benini, "Accelerating real-time embedded scene labeling with convolutional networks", Proc. ACM/IEEE/EDAC DAC'15, [http://ieeexplore.ieee.org/xpl/articleDetails.jsp?tp=&arnumber=7167293] |

| − | |||

===Practical Details=== | ===Practical Details=== | ||

Latest revision as of 18:52, 12 December 2016

Short Description

Imaging sensor networks, UAVs, smartphones, and other embedded computer vision systems require power-efficient, low-cost, and high-speed implementations of synthetic vision systems capable of recognizing and classifying objects in a scene. Many popular algorithms in this area require evaluating multiple layers of filter banks, and almost all state-of-the-art synthetic vision systems are based on features extracted using multi-layer convolutional networks (ConvNets). When evaluating ConvNets, most of the time (80% to 90%) is spent performing the convolutions. We have built an accelerator for this, Origami, which has been very successful. Nevertheless, it has some limitations: its filter sizes are fixed at synthesis time, and there is room for improvement in energy efficiency. We are looking for your creativity to rethink the architecture, completely or incrementally, to make it more versatile and energy efficient.
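To make the workload concrete, the following is a minimal NumPy sketch of a single convolution layer as it is commonly defined for ConvNets (valid padding, stride 1, computed as a cross-correlation). It is not a model of Origami; the names `conv_layer` and `mac_count` are hypothetical and chosen for illustration only. The filter size `k` is read from the weight tensor at run time, which is the kind of flexibility a design with synthesis-time fixed filter sizes does not offer.

```python
import numpy as np

def conv_layer(x, w):
    """Direct 2D convolution layer (valid padding, stride 1).

    x: input feature maps, shape (n_in, H, W)
    w: filters, shape (n_out, n_in, k, k); k is taken from w at run time
    returns: output feature maps, shape (n_out, H-k+1, W-k+1)
    """
    n_out, n_in, k, _ = w.shape
    _, H, W = x.shape
    out_h, out_w = H - k + 1, W - k + 1
    y = np.zeros((n_out, out_h, out_w))
    for dy in range(k):
        for dx in range(k):
            # Input window shifted by (dy, dx): shape (n_in, out_h, out_w)
            patch = x[:, dy:dy + out_h, dx:dx + out_w]
            # Sum over input channels for every output channel
            y += np.einsum('oi,ihw->ohw', w[:, :, dy, dx], patch)
    return y

def mac_count(n_in, n_out, k, out_h, out_w):
    """Multiply-accumulate operations needed for one layer."""
    return n_in * n_out * k * k * out_h * out_w
```

The operation count grows with the product of input channels, output channels, filter area, and output pixels, which is consistent with convolutions dominating the evaluation time. For example, `mac_count(3, 8, 7, 10, 10)` gives 117600 multiply-accumulates for a small 10x10 output tile with 7x7 filters.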

Status: Not Available

- 1-2 Master thesis or 1-3 semester project students

- Supervision: Lukas Cavigelli

Prerequisites

- Interest in VLSI architecture exploration and computer vision

- VLSI 1 or equivalent

Character

- 15% Theory / Literature Research

- 85% VLSI Architecture, Implementation & Verification

Professor

Detailed Task Description

A detailed task description will be worked out right before the project, taking the student's interests and capabilities into account.

Literature

- Hardware Acceleration of Convolutional Networks:

  - Lukas Cavigelli, David Gschwend, Christoph Mayer, Samuel Willi, Beat Muheim, Luca Benini, "Origami: A Convolutional Network Accelerator", Proc. ACM/IEEE GLS-VLSI'15 [http://dl.acm.org/citation.cfm?id=2743766] [http://arxiv.org/abs/1512.04295]

  - F. Conti, L. Benini, "A Ultra-Low-Energy Convolution Engine for Fast Brain-Inspired Vision in Multicore Clusters", Proc. ACM/IEEE DATE'15 [http://dl.acm.org/citation.cfm?id=2755910]

  - Yu-Hsin Chen, Tushar Krishna, Joel Emer, Vivienne Sze, "Eyeriss: An Energy-Efficient Reconfigurable Accelerator for Deep Convolutional Neural Networks", Proc. ISSCC'16.

  - C. Farabet, B. Martini, B. Corda, P. Akselrod, E. Culurciello and Y. LeCun, "NeuFlow: A Runtime Reconfigurable Dataflow Processor for Vision", Proc. IEEE ECV'11@CVPR'11 [http://ieeexplore.ieee.org/xpls/icp.jsp?arnumber=5981829]

  - V. Gokhale, J. Jin, A. Dundar, B. Martini and E. Culurciello, "A 240 G-ops/s Mobile Coprocessor for Deep Neural Networks", Proc. IEEE CVPRW'14 [http://ieeexplore.ieee.org/xpl/articleDetails.jsp?tp=&arnumber=6910056]

  - Chen Zhang, Peng Li, Guangyu Sun, Yijin Guan, Bingjun Xiao, Jason Cong, "Optimizing FPGA-based Accelerator Design for Deep Convolutional Neural Networks", Proc. FPGA'15 [http://cadlab.cs.ucla.edu/~cong/slides/fpga2015_chen.pdf]

- L. Cavigelli, M. Magno, L. Benini, "Accelerating real-time embedded scene labeling with convolutional networks", Proc. ACM/IEEE/EDAC DAC'15 [http://ieeexplore.ieee.org/xpl/articleDetails.jsp?tp=&arnumber=7167293]