System Emulation for AR and VR devices



Overview

Augmented Reality/Virtual Reality (AR/VR) wearables are gaining momentum due to their potential to revolutionize how we perceive the world. Fitting smartphone-like processing capabilities into a lightweight form factor is challenging: meeting real-time processing constraints within a limited power budget requires a novel approach to system-level design and optimization across the entire stack. These AR/VR wearable systems use multiple sensors and a range of algorithms to deliver an immersive experience. To sustain that experience, the underlying hardware must be flexible enough to accommodate a variety of algorithms, including but not limited to VIO (Visual-Inertial Odometry), tracking, scene reconstruction, and the visual and audio pipelines [1]. The challenge is to arrive at a hardware design that is flexible yet specialized enough to support these different workloads within the latency, power, and area budgets. Meeting such stringent budgets requires weighing different design decisions against each other.

Status: Available

- Type: Semester Thesis/Master Thesis

- Professor: Prof. Dr. L. Benini

- Supervisors:

Project Description

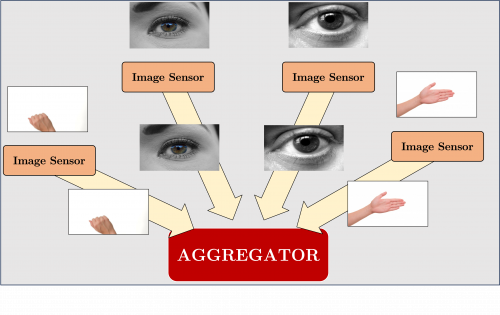

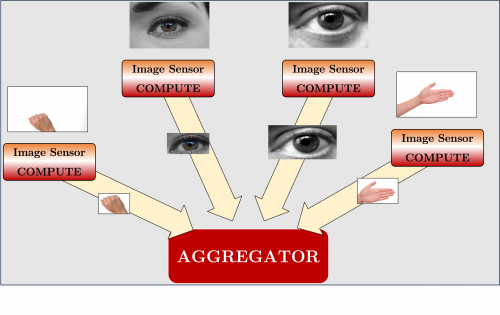

In eyeglass systems, vision is one of the key pipelines. The images generated by the multiple sensors are used for hand tracking, eye tracking, object recognition, etc. The traditional computing approach captures the images on the sensor and sends them to an aggregator (power SoC) for processing. Transferring the data off-chip creates power and latency bottlenecks [3]. Thanks to 3D stacking, on-sensor compute is now possible: some of the workloads can run on the sensor itself, and the data is sent to the aggregator only when needed. However, the on-sensor compute also has a power overhead. This project explores which design choice (traditional or distributed) is better for different workloads.
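The trade-off between the two organizations can be illustrated with a rough per-frame energy model. The sketch below is only a first-order estimate, not the methodology of [3] or a GVSoC model: the class names, the cost parameters, and every numeric value are hypothetical placeholders chosen for illustration. It also assumes that, in the distributed case, the sensor always runs the workload and forwards a small result, while a fraction of the raw frames is still sent to and processed by the aggregator.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Per-frame description of a vision workload (illustrative values only)."""
    name: str
    frame_bytes: int       # raw image size produced by the sensor
    result_bytes: int      # size of the result when processed on-sensor
    ops: float             # operations needed per frame
    send_fraction: float   # share of frames whose raw data still goes off-chip


@dataclass
class Platform:
    """Hypothetical energy costs, not measured on any real chip."""
    link_pj_per_byte: float       # off-chip transfer energy
    aggregator_pj_per_op: float   # compute energy on the aggregator SoC
    sensor_pj_per_op: float       # compute energy on the on-sensor processor
    sensor_overhead_uj: float     # fixed per-frame cost of on-sensor compute


def traditional_energy_uj(w: Workload, p: Platform) -> float:
    """Every raw frame is transferred off-chip and processed on the aggregator."""
    transfer_pj = w.frame_bytes * p.link_pj_per_byte
    compute_pj = w.ops * p.aggregator_pj_per_op
    return (transfer_pj + compute_pj) * 1e-6  # pJ -> uJ


def distributed_energy_uj(w: Workload, p: Platform) -> float:
    """The sensor processes every frame; raw data leaves only when needed."""
    sensor_pj = w.ops * p.sensor_pj_per_op
    transfer_pj = (w.result_bytes + w.send_fraction * w.frame_bytes) * p.link_pj_per_byte
    # Forwarded frames are assumed to be processed again on the aggregator.
    aggregator_pj = w.send_fraction * w.ops * p.aggregator_pj_per_op
    return (sensor_pj + transfer_pj + aggregator_pj) * 1e-6 + p.sensor_overhead_uj


def better_option(w: Workload, p: Platform) -> str:
    """Return the organization with the lower estimated energy per frame."""
    if distributed_energy_uj(w, p) < traditional_energy_uj(w, p):
        return "distributed"
    return "traditional"


# Placeholder platform and workloads, chosen only to show both outcomes.
platform = Platform(link_pj_per_byte=100.0, aggregator_pj_per_op=1.0,
                    sensor_pj_per_op=2.0, sensor_overhead_uj=5.0)
light = Workload("light_detector", frame_bytes=640 * 480, result_bytes=64,
                 ops=1e6, send_fraction=0.1)
heavy = Workload("heavy_network", frame_bytes=640 * 480, result_bytes=64,
                 ops=50e6, send_fraction=0.1)

if __name__ == "__main__":
    for w in (light, heavy):
        print(w.name, better_option(w, platform))
```

With these placeholder numbers the lightweight workload favors on-sensor processing because the saved transfer energy dominates, whereas the compute-heavy workload favors the aggregator because the on-sensor processor is assumed to be less energy-efficient per operation. Real conclusions depend on measured costs for the target hardware.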

Character

GVSoC [2] is a highly configurable, fast and accurate full-platform simulator for RISC-V-based IoT processors. An emulation model of one of our latest chips, Siracusa [4], is integrated into GVSoC. In this project, the student will:

1. Extend the GVSoC emulation platform to create/add necessary modules required to support system emulation on Siracusa

2. Quantitatively assess the performance of different workloads on a traditional and a distributed system.
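As a rough illustration of the second task, the sketch below compares per-workload results from the two system organizations and checks them against a latency budget. It assumes the simulator runs have already been reduced to one latency and one energy figure per workload and configuration. The `Measurement` record, the `compare` helper, and all values are hypothetical placeholders, not GVSoC output or project results.

```python
from typing import NamedTuple


class Measurement(NamedTuple):
    workload: str
    config: str        # "traditional" or "distributed"
    latency_ms: float
    energy_mj: float


def compare(measurements, latency_budget_ms):
    """Summarize distributed vs. traditional results for each workload."""
    by_workload = {}
    for m in measurements:
        by_workload.setdefault(m.workload, {})[m.config] = m

    report = {}
    for name, configs in by_workload.items():
        trad = configs["traditional"]
        dist = configs["distributed"]
        report[name] = {
            # Ratios below 1.0 mean the distributed system is better.
            "latency_ratio": dist.latency_ms / trad.latency_ms,
            "energy_ratio": dist.energy_mj / trad.energy_mj,
            "meets_budget": {
                cfg: m.latency_ms <= latency_budget_ms
                for cfg, m in configs.items()
            },
        }
    return report


# Placeholder numbers for illustration only.
example = [
    Measurement("hand_tracking", "traditional", latency_ms=12.0, energy_mj=3.0),
    Measurement("hand_tracking", "distributed", latency_ms=9.0, energy_mj=1.5),
]

if __name__ == "__main__":
    for name, summary in compare(example, latency_budget_ms=10.0).items():
        print(name, summary)
```

Keeping the comparison as ratios per workload makes it easy to see which workloads benefit from on-sensor compute and which are better left on the aggregator.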

Workload Distribution

- 10% Literature research

- 55% Extending GVSoC model

- 25% Workload creation and deployment

- 10% Verification

Required Skills

To work on this project, you will need the following:

- Proficiency in C++ and Python

- Strong interest in computer architecture

- Familiarity with Deep Learning and traditional DSP algorithms

- Prior experience with at least one RTL language (SystemVerilog, Verilog, or VHDL); having followed (or actively following during the project) the VLSI1 / VLSI2 courses is strongly recommended

References

[1] Huzaifa, Muhammad, et al. "ILLIXR: Enabling end-to-end extended reality research." 2021 IEEE International Symposium on Workload Characterization (IISWC). IEEE, 2021.

[2] Bruschi, Nazareno, et al. "GVSoC: a highly configurable, fast and accurate full-platform simulator for RISC-V based IoT processors." 2021 IEEE 39th International Conference on Computer Design (ICCD). IEEE, 2021.

[3] Gomez, Jorge, et al. "Distributed on-sensor compute system for AR/VR devices: A semi-analytical simulation framework for power estimation." arXiv preprint arXiv:2203.07474 (2022).