Difference between revisions of "Acceleration and Transprecision"

From iis-projects

Revision as of 09:02, 25 October 2018

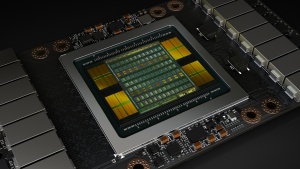

An NVIDIA Tesla V100 GP-GPU. This cutting-edge accelerator provides huge computational power on a massive 800 mm² die.

Accelerators are the backbone of big data and scientific computing. While general-purpose processor architectures such as Intel's x86 provide good performance across a wide variety of applications, it is only since the advent of general-purpose GPUs that many computationally demanding tasks have become feasible. Because these GPUs support a much narrower set of operations, their architecture is easier to optimize for efficiency. Such accelerators are not limited to the high-performance sector. In low-power computing, they allow complex tasks such as computer vision or cryptography to be performed under a very tight power budget; without a dedicated accelerator, these tasks would not be feasible.

Who We Are

Francesco Conti

- fconti@iis.ee.ethz.ch

- ETZ J78

Stefan Mach

- smach@iis.ee.ethz.ch

- ETZ J89

Fabian Schuiki

- fschuiki@iis.ee.ethz.ch

- ETZ J89

Manuel Eggimann

- meggiman@iis.ee.ethz.ch

- ETZ J68

Available Projects

- Extending our FPU with Internal High-Precision Accumulation (M)

- Low Precision Ara for ML

- Hardware Exploration of Shared-Exponent MiniFloats (M)

- Approximate Matrix Multiplication based Hardware Accelerator to achieve the next 10x in Energy Efficiency: Full System Integration

- Extended Verification for Ara

- Ibex: Tightly-Coupled Accelerators and ISA Extensions

- RVfplib

- Scalable Heterogeneous L1 Memory Interconnect for Smart Accelerator Coupling in Ultra-Low Power Multicores

Projects In Progress

- Fault-Tolerant Floating-Point Units (M)

- Virtual Memory Ara

- New RVV 1.0 Vector Instructions for Ara

- Big Data Analytics Benchmarks for Ara

- An all Standard-Cell Based Energy Efficient HW Accelerator for DSP and Deep Learning Applications

Completed Projects

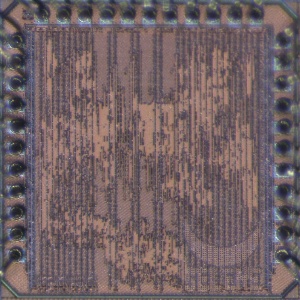

The Logarithmic Number Unit chip Selene.

- Integrating an Open-Source Double-Precision Floating-Point DivSqrt Unit into CVFPU (1S)

- Investigating the Cost of Special-Case Handling in Low-Precision Floating-Point Dot Product Units (1S)

- Optimizing the Pipeline in our Floating Point Architectures (1S)

- Streaming Integer Extensions for Snitch (M/1-2S)

- A Unified Compute Kernel Library for Snitch (1-2S)

- NVDLA meets PULP

- Hardware Accelerators for Lossless Quantized Deep Neural Networks

- Floating-Point Divide & Square Root Unit for Transprecision

- Low-Energy Cluster-Coupled Vector Coprocessor for Special-Purpose PULP Acceleration

- Design and Implementation of an Approximate Floating Point Unit